AIQA: How We Leverage AI-powered Tools for Quality Assurance at JetRuby

Discover how we at JetRuby use ChatGPT, GitHub Copilot, and custom workflows to improve quality assurance and reduce release time with AI testing.


AIQA (Artificial Intelligence for Quality Assurance) is a “tool” everyone can use today to improve productivity.

Rather than giving in to the fear of AI taking jobs, we treat it as an amplifier of human insight.

At JetRuby, we asked ourselves: “How much test coverage and confidence in releases are we losing by only using human effort?”

We found that tasks like analyzing requirements, creating test materials, and maintaining API or load test suites took up to 40% of a QA engineer’s time during a sprint.

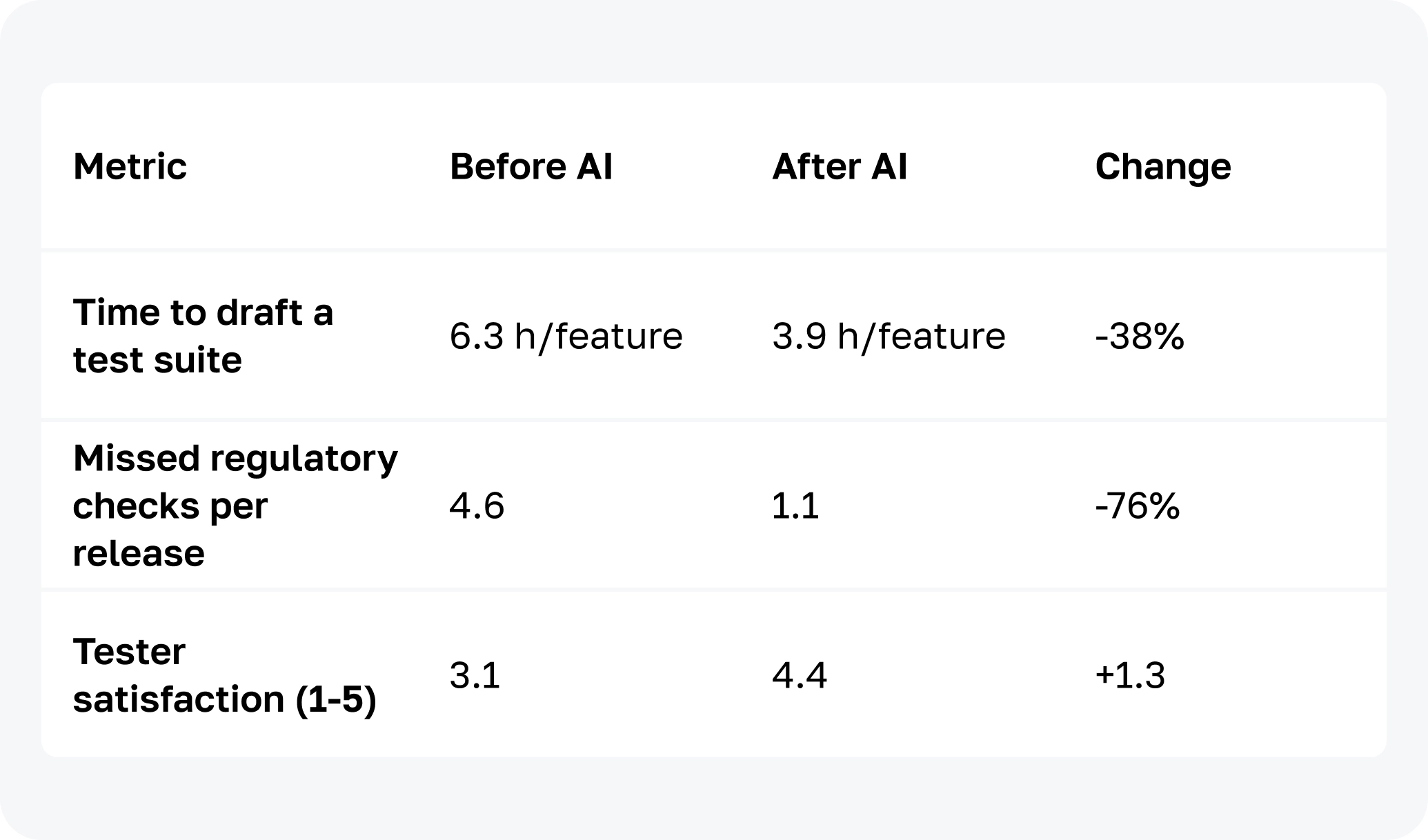

Since we started using AIQA, we have seen important improvements:

- We cut the time for requirement analysis from three days to one day.

- We reduced release preparation time by 20 to 30%.

- We now detect specification mismatches early enough to stop 5 to 10% of critical production bugs.

In the pages that follow, you will learn why we chose AI for QA, how we introduced it step-by-step, the tools we use, the ROI we measure, and the candid limitations we still battle from time to time.

Key Takeaways

- AIQA ≠ automation 2.0: It is a mindset that shifts QA effort from rote work to insight generation.

- Clear KPIs — coverage, escaped-defect rate, cycle time — turn AI adoption from hype to hard ROI.

- ChatGPT accelerates requirement analysis and test-case ideation, GitHub Copilot speeds up API, performance, and E2E test coding, and AI code review strengthens our Ruby on Rails projects downstream.

- Pilot → feedback → a gradual rollout of AI platforms is better than launching them all at once.

- Double-layer “AI-first, human-final” review keeps trust high.

- AI still struggles with volatile UIs and domain-specific E2E flows, so manual insight still matters.

- Next on our roadmap: domain-tuned LLMs, AI-assisted accessibility audits, and a proprietary QA copilot that already tracks the top 20 AI-automatable routines inside JetRuby.

Our Goals for AI in QA

Our ambition is simple: remove the friction that delays releases and saps creative energy.

Today’s biggest challenges are long document reviews, last-minute test creation, and unstable regression tests. AI test-automation tools and other AI-assisted solutions help us address all three at once.

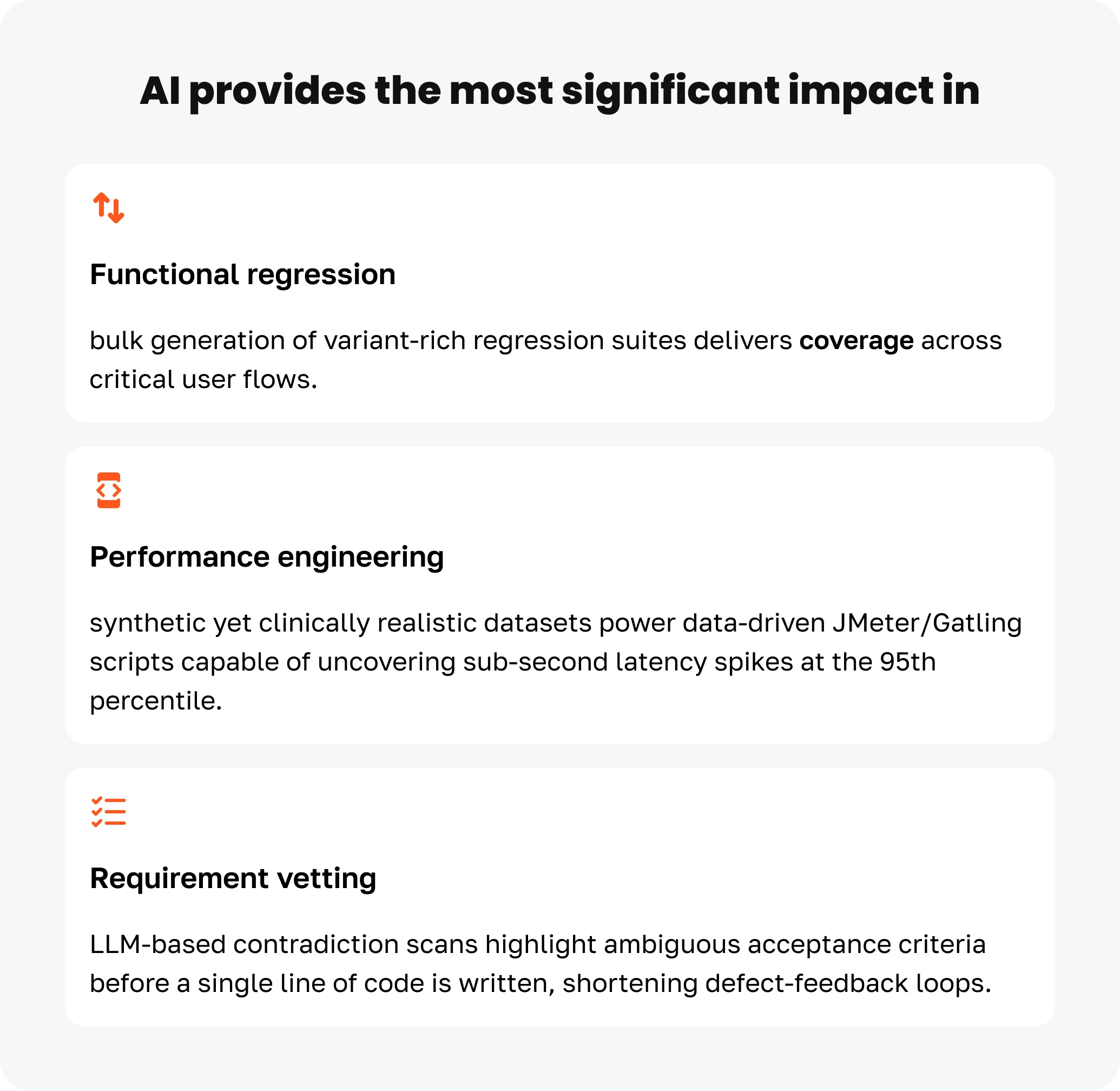

#1. Instant, collaborative requirements scrutiny

Large language models (LLMs) ingest specs in their raw form, highlight ambiguities, and surface contradictions in minutes — something that once stole whole sprint cycles.

To make this work, we invested time in understanding AI training data quality, not just model size.

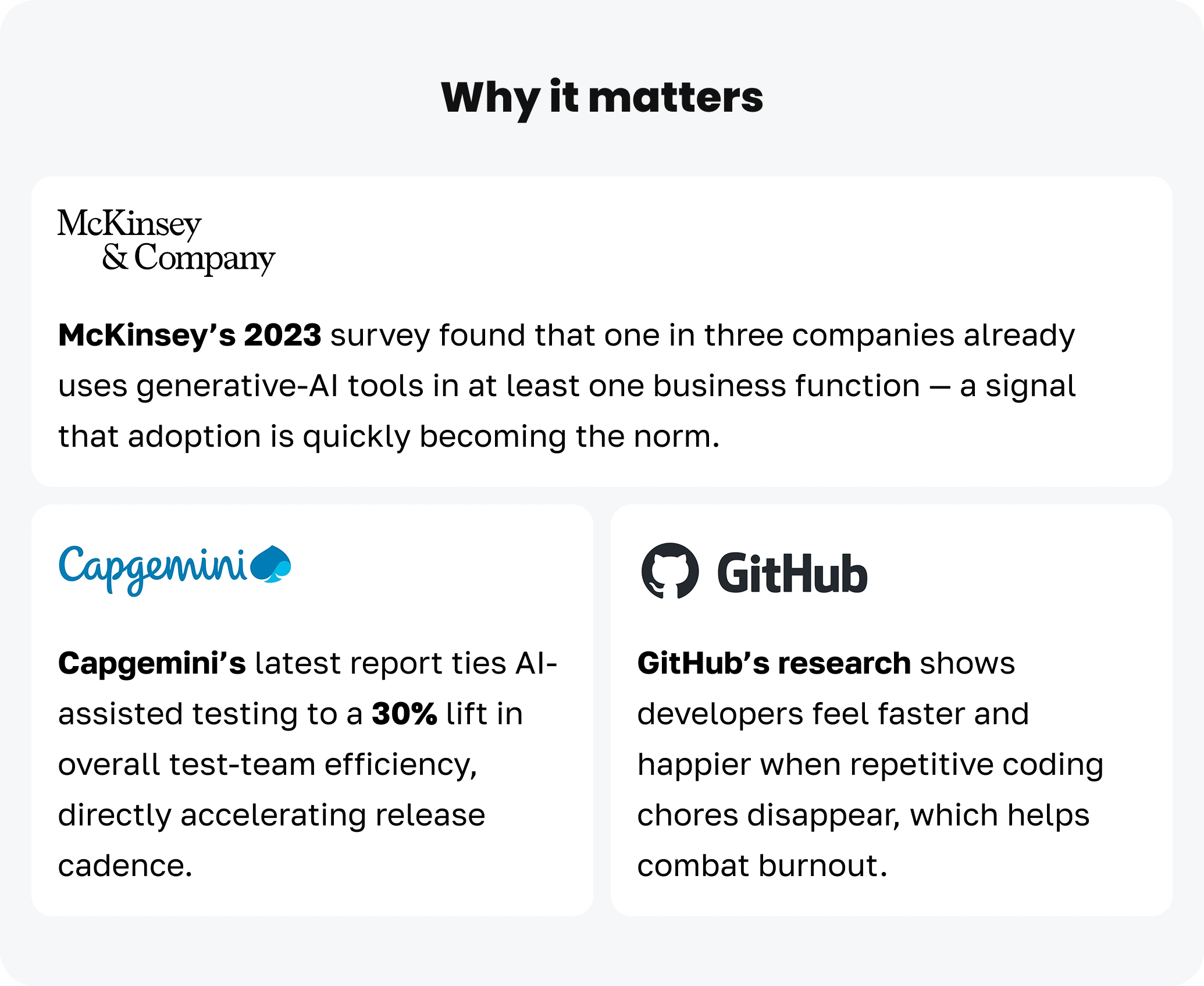

According to the 16th World Quality Report, 68 % of organizations already lean on generative AI for quality-engineering tasks, and 72 % report noticeably faster automation as a direct result.

#2. Living user stories and edge-case libraries

After clarifying requirements, those same LLMs draft user stories and enumerate edge-case scenarios, creating a direct trace from “idea” to “test.”

In Tricentis’ 2024 DevOps survey, teams ranked testing as the single most valuable target for AI investment. 47.5 % now use AI to decide what to test, and 44 % to generate the cases themselves.

#3. Self-healing, low-maintenance regression suites

AI-powered tools can repair broken element locators, focus test runs on high-risk areas, and retire tests that code changes have made obsolete.
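Self-healing is often implemented as a locator fallback chain: when the primary selector stops matching, the tool tries ranked alternatives and records which one worked so a human can update the suite. The Python sketch below illustrates the idea under simplifying assumptions: the page is a plain dict mapping selectors to element IDs (standing in for a real browser driver), and `HealingLocator` is a hypothetical helper, not the API of any specific tool.

```python
from dataclasses import dataclass, field


@dataclass
class HealingLocator:
    """Hypothetical helper: a primary selector followed by ranked fallbacks."""
    name: str
    candidates: list[str]  # primary selector first
    healed: list[str] = field(default_factory=list)

    def find(self, page: dict[str, str]) -> str:
        # `page` stands in for a real DOM query: selector -> element id.
        for rank, selector in enumerate(self.candidates):
            element = page.get(selector)
            if element is None:
                continue
            if rank > 0:
                # Record the heal so a reviewer can promote the working selector.
                self.healed.append(
                    f"{self.name}: '{self.candidates[0]}' failed, used '{selector}'"
                )
            return element
        raise LookupError(f"{self.name}: no candidate selector matched")


# After a UI refactor the element id changed, but its data-testid survived.
page = {"[data-testid=submit]": "btn-42", "button.primary": "btn-42"}
submit = HealingLocator("submit", ["#submit-btn", "[data-testid=submit]", "button.primary"])
print(submit.find(page))  # btn-42
print(submit.healed)
```

Logging every heal, instead of silently accepting it, keeps a person in the loop: the fallback keeps the run green, while the log tells someone which page object to fix.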

Industry research points the same way: Deloitte expects AI tools to cut software development costs by 20% to 40%, and McKinsey reports that generative AI assistants can improve developer productivity by 35 to 45%.

This boost helps make regression tests more efficient and reliable.

#4. Re-focusing people on analysis and insight

When machines write, triage, and maintain the repetitive parts, engineers reclaim much of their time for exploratory testing, risk modelling, and customer-journey analytics.

A third of respondents in the same Tricentis study estimate AI tooling already saves them 40 hours or more every month.

By weaving these capabilities into our daily workflow, we expect faster and safer releases, turning QA from a perceived bottleneck into JetRuby’s competitive advantage.

For teams whose products run on Ruby on Rails, the Rails 8 release adds features such as Kamal 2 for deployment and the database-backed Solid Cache, Solid Queue, and Solid Cable, which pair well with these AI-driven speed-ups.

Our Approach to Implementing AI in QA

We resisted the temptation to “buy everything AI” on day one.

Instead, we followed a four-step path.

#1. Pain-Point Mapping

Before touching a model, we gathered our QA leads for a half-day workshop. Together, we listed 47 recurring tasks, then rated each one for manual effort, frequency, and defect impact. Those ratings fed into a clear “automation heat map” that showed which tasks to automate first.

The exercise revealed two fast wins:

- Boilerplate test-case creation was eating nearly one-fifth of tester hours.

- Requirement-clarity checks were responsible for more than a quarter of all rework loops.
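The heat-map ranking described above can be sketched as a simple scoring function. This is a minimal sketch, assuming 1-to-5 ratings and a multiplicative score; the workshop’s actual scales and weighting are not stated here, and the example ratings are purely illustrative.

```python
from typing import NamedTuple


class Task(NamedTuple):
    name: str
    effort: int     # manual effort: 1 (trivial) to 5 (heavy)
    frequency: int  # recurrence: 1 (rare) to 5 (every sprint)
    impact: int     # defect impact if done badly: 1 (cosmetic) to 5 (critical)


def heat_score(task: Task) -> int:
    # Multiplicative, so a task must rate high on every axis to reach the top.
    return task.effort * task.frequency * task.impact


def heat_map(tasks: list[Task]) -> list[tuple[str, int]]:
    """Return (task name, score) pairs, hottest first."""
    ranked = sorted(tasks, key=heat_score, reverse=True)
    return [(task.name, heat_score(task)) for task in ranked]


# Illustrative ratings only, not the workshop's actual data.
tasks = [
    Task("Boilerplate test-case creation", effort=4, frequency=5, impact=3),
    Task("Requirement-clarity checks", effort=3, frequency=5, impact=5),
    Task("Release-notes formatting", effort=2, frequency=3, impact=1),
]
for name, score in heat_map(tasks):
    print(f"{score:>3}  {name}")
```

A product rather than a sum is one design choice among several: it pushes down tasks that are frequent but harmless, which a weighted sum would rank higher.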

#2. Pilot Project in a Regulated Domain

We chose a healthcare platform that follows HIPAA rules, an environment where mistakes are costly and audit trails are essential. We set specific goals for GPT-4o.

Its brief was to review new requirements for gaps, ambiguities, and regulatory issues, and to draft first-pass test cases in Gherkin format, including suggested edge cases.
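A request like the pilot’s can be sketched as a Chat Completions call. The Python below only builds the JSON body for OpenAI’s `/v1/chat/completions` endpoint and does not send it; the system-prompt wording and the `build_review_request` helper are illustrative assumptions, not our production prompt.

```python
import json

# Hypothetical reviewer instructions; adapt them to your own domain.
SYSTEM_PROMPT = (
    "You are a QA analyst for a HIPAA-regulated healthcare product. "
    "For the requirement you receive: (1) list gaps, ambiguous wording, and "
    "possible regulatory issues; (2) draft first-pass Gherkin test cases, "
    "including edge cases. Do not invent requirements that are not stated."
)


def build_review_request(requirement: str, model: str = "gpt-4o") -> str:
    """Build a Chat Completions request body (JSON) for one requirement."""
    body = {
        "model": model,
        # Low temperature reduces, but does not eliminate, run-to-run variation.
        "temperature": 0,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": requirement.strip()},
        ],
    }
    return json.dumps(body, indent=2)


print(build_review_request("Patients can export their lab results as PDF."))
```

To send it, POST the body to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer <API key>` header, or pass the same fields to OpenAI’s official SDK.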

Three sprints later, the results were clear: no critical defects escaped to production, a green light for broader use.

#3. Gradual Release

Success breeds the temptation to scale too quickly, but we resisted. Over the next four months, we expanded AI support in three waves:

- API suites: seeding ChatGPT with OpenAPI specs so it proposes request-response matrices.

- Load-testing scaffolds: generating synthetic payloads and locating throughput choke points.

- Selective end-to-end paths: high-traffic user journeys only, with human approval gates.
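Before an LLM proposes a request-response matrix, a deterministic pass over the OpenAPI spec can enumerate every documented (method, path, status) combination as a baseline to review its suggestions against. The sketch below assumes the spec is already parsed into a Python dict (from JSON or YAML); `request_response_matrix` is a hypothetical helper, not the pipeline we actually run.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def request_response_matrix(spec: dict) -> list[tuple[str, str, str]]:
    """Every (METHOD, path, documented status) triple in an OpenAPI 3 spec dict."""
    rows = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            for status in operation.get("responses", {}):
                rows.append((method.upper(), path, str(status)))
    return sorted(rows)


# A trimmed-down spec; a real one would also carry "info", schemas, and so on.
spec = {
    "openapi": "3.0.3",
    "paths": {
        "/patients/{id}": {
            "parameters": [{"name": "id", "in": "path", "required": True}],
            "get": {"responses": {"200": {}, "404": {}}},
            "delete": {"responses": {"204": {}, "403": {}}},
        }
    },
}
for row in request_response_matrix(spec):
    print(row)
```

Any row the model’s matrix misses is a coverage gap; any status the model invents that the spec does not document is a question for the API owner.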

Coverage increased from 28 % to 61 %, yet false positives stayed flat — evidence that a staged rhythm beats a big bang.

#4. Improving Processes and Team Support

Technology adoption slows down without a change in company culture. To address this, we created personalized development plans for every engineer.

We also applied our guide on how to reduce employee turnover, which helps us retain top-tier engineers and attract new ones as required skills change.

Additionally:

- We started holding workshops called “Prompt Engineering for QA” every two weeks. In these live sessions, engineers improve prompts and analyze mistakes in AI responses.

- We added a two-step review process: every AI-generated artifact must pass both human and automated quality checks before approval.

- We record every prompt, response, and human change in a central database. This helps us track problems back to their original conversations.
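A central prompt log like the one described above can be sketched with any relational store. The example below uses Python’s built-in `sqlite3` with an in-memory database; the `ai_audit` table, its columns, and the ticket ID are hypothetical, chosen only to show how a prompt, its response, and the human-approved version stay linked for later tracing.

```python
import sqlite3
from datetime import datetime, timezone

SCHEMA = """
CREATE TABLE IF NOT EXISTS ai_audit (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    created_at  TEXT NOT NULL,
    ticket      TEXT NOT NULL,  -- work item the exchange belongs to
    prompt      TEXT NOT NULL,
    response    TEXT NOT NULL,
    human_final TEXT            -- reviewer-approved version; NULL until reviewed
)
"""


def log_exchange(conn: sqlite3.Connection, ticket: str, prompt: str, response: str) -> int:
    cur = conn.execute(
        "INSERT INTO ai_audit (created_at, ticket, prompt, response) VALUES (?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), ticket, prompt, response),
    )
    conn.commit()
    return cur.lastrowid


def record_human_edit(conn: sqlite3.Connection, row_id: int, final_text: str) -> None:
    conn.execute("UPDATE ai_audit SET human_final = ? WHERE id = ?", (final_text, row_id))
    conn.commit()


def trace(conn: sqlite3.Connection, ticket: str) -> list[tuple]:
    """Every exchange for a ticket, oldest first: the trail back to the original conversation."""
    return conn.execute(
        "SELECT prompt, response, human_final FROM ai_audit WHERE ticket = ? ORDER BY id",
        (ticket,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
row_id = log_exchange(conn, "QA-101", "Draft edge cases for PDF export", "1. Empty result set")
record_human_edit(conn, row_id, "1. Empty result set\n2. 500-page report")
print(trace(conn, "QA-101"))
```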

This blend of tooling and governance pushed us into the top efficiency quartile reported by industry benchmarks.

Why It Works

Tying automation levels to the heat map protected us from a common failure mode: AI projects launched without a clear testing strategy.

By checking AI systems early, we cut compliance issues by 75%. That matters because HIPAA civil penalties can reach $1.5 million per calendar year for each violation category, and more once inflation adjustments apply.

More importantly, testers are not being replaced by AI; they are becoming AI coordinators. Our surveys show that teams using AI now complete 35 to 40% more work than those relying only on manual processes.

So, what’s next?

- We are developing a testing system to update priorities when the code or data changes. This system will help us stay flexible and respond quickly to updates.

- We will adopt a large language model (LLM) tailored to our domain. Richer context should reduce errors and make its output more accurate.

- We will also align our practices with the new ISO/IEC 42001 AI management standard. This will help us keep clear records and ensure accountability in our AI systems.
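The first roadmap item, re-prioritizing tests when code or data changes, is commonly approached as change-based test selection. The sketch below assumes a per-test coverage map (for example, exported from a coverage tool) and a recent failure rate per test; the ranking rule, the `prioritize` helper, and the test names are hypothetical illustrations, not the system under development.

```python
def prioritize(coverage: dict[str, set[str]], changed: set[str],
               failure_rate: dict[str, float]) -> list[str]:
    """Order tests so those touching changed files run first.

    coverage: test name -> source files it exercises
    changed: files touched by the current commit or data refresh
    failure_rate: recent failure rate per test, 0.0 to 1.0
    """
    def score(test: str) -> tuple[int, float]:
        # Primary key: changed files the test covers; tie-break on failure rate.
        return (len(coverage[test] & changed), failure_rate.get(test, 0.0))

    return sorted(coverage, key=score, reverse=True)


coverage = {
    "test_login": {"auth.py"},
    "test_export_pdf": {"export.py", "pdf.py"},
    "test_search": {"search.py", "export.py"},
}
print(prioritize(coverage, changed={"export.py", "pdf.py"},
                 failure_rate={"test_search": 0.2, "test_login": 0.5}))
# ['test_export_pdf', 'test_search', 'test_login']
```

Every test still runs; the ordering only brings the most relevant feedback to the front of the CI run.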

By following a careful process of mapping, piloting, expanding, and refining, we have changed generative AI from a flashy tool into a reliable partner. This allows us to deliver software that is faster, safer, and easier to trust.

AI Tools and Technologies Used

We chose specific generative AI tools and low-code testing platforms to improve quality engineering while keeping our release schedule aligned with HIPAA regulations.

Each tool has a specific role: some turn requirements into user stories, others help with test code in the IDE, and some enable testing without scripts.

ChatGPT (OpenAI API)

We begin each sprint with many raw notes from stakeholders, security checklists, and regulatory requirements. A simple tool we call Requirements-Bot sends this information to the GPT-4o endpoint.

In just a few seconds, it provides a clear backlog. This includes user stories that meet the INVEST criteria, relevant Gherkin scenarios, and a traceability matrix that connects every acceptance criterion to a HIPAA control.
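A traceability matrix like this is easy to check automatically once it is structured data. The sketch below assumes each acceptance criterion maps to a list of HIPAA Security Rule citations (45 CFR §164.312); the criteria and the mapping are illustrative, and the two helpers are hypothetical, not part of Requirements-Bot.

```python
from collections import defaultdict

# Illustrative matrix: acceptance criterion -> HIPAA Security Rule citations (45 CFR).
traceability = {
    "AC-1 Only the treating clinician can open a chart": ["164.312(a)(1)"],  # access control
    "AC-2 Every chart view is written to the audit log": ["164.312(b)"],     # audit controls
    "AC-3 Lab results are transmitted over TLS only": ["164.312(e)(1)"],     # transmission security
    "AC-4 Export shows a progress indicator": [],
}


def unmapped(matrix: dict[str, list[str]]) -> list[str]:
    """Criteria with no control attached: each needs a mapping or an explicit N/A."""
    return [criterion for criterion, controls in matrix.items() if not controls]


def by_control(matrix: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert the matrix so an auditor can start from a control and find its criteria."""
    index = defaultdict(list)
    for criterion, controls in matrix.items():
        for control in controls:
            index[control].append(criterion)
    return dict(index)


print(unmapped(traceability))  # ['AC-4 Export shows a progress indicator']
print(by_control(traceability)["164.312(b)"])
```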

Why did it stick?

The OpenAI API required no new infrastructure. A senior analyst built the prototype in just forty-eight hours. Since then, the time our business analysis team spends preparing stories has dropped by about two-thirds, according to internal Tempo logs.

Developers have also reported fewer last-minute clarifications because stories come in clear and ready for testing from the start.

GitHub Copilot

Once the backlog is set up, GitHub Copilot removes the anxiety of starting from a blank file. Inside VS Code and IntelliJ, it suggests the scaffolding for REST-assured fixtures, k6 performance scripts, and Cypress/Playwright UI tests.

Because Copilot draws on the surrounding code, its suggestions tend to follow our company’s security rules and even pull in the correct secrets wrapper.

Why did it stick?

Copilot works within the tools engineers already use, so they don’t have to switch between different systems. During our four-month trial, engineers built a working API test harness 55% faster than without AI.

Post-merge lint violations also fell by nearly a third.

These small improvements add up when we merge hundreds of pull requests every month.

Governance in Plain Language

We prioritize privacy. Before any data leaves our network, a redaction proxy removes all personal health information (PHI) from user prompts.

This keeps sensitive details inside our network while still letting us process each request.
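The pattern-matching core of such a redaction proxy can be sketched in a few lines. This sketch is deliberately limited: it catches only structured identifiers (SSNs, emails, US-style phone numbers, and an assumed in-house MRN format), whereas names, addresses, and free-text clinical details need named-entity recognition or a dedicated de-identification service. The pattern list and `redact` helper are hypothetical.

```python
import re

# Structured identifiers only. Names, addresses, and free-text clinical details
# need NER or a dedicated de-identification service on top of this.
PHI_PATTERNS = [
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("PHONE", re.compile(r"(?<!\d)(?:\(\d{3}\)\s?|\d{3}[-. ])\d{3}[-. ]\d{4}(?!\d)")),
    # Assumed in-house medical record number format, e.g. "MRN: 12345678".
    ("MRN", re.compile(r"\bMRN[:# ]*\d{6,10}\b", re.IGNORECASE)),
]


def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] token; return the text and per-label counts."""
    counts: dict[str, int] = {}
    for label, pattern in PHI_PATTERNS:
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


text = "Patient MRN: 12345678, SSN 123-45-6789, call 555-123-4567 or email jane.doe@example.com"
clean, found = redact(text)
print(clean)  # Patient [MRN], SSN [SSN], call [PHONE] or email [EMAIL]
```

Returning the counts alongside the text lets the proxy log what it removed without logging the PHI itself.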

We run nightly checks on a sample of user interactions. Our system reviews responses and flags inconsistencies. If answers change by more than 5%, we begin an investigation.

This helps us improve accuracy over time.
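The nightly consistency check can be sketched by comparing each sampled answer with its approved baseline. The sketch assumes “change by more than 5%” means a character-level similarity below 95%, measured with Python’s `difflib.SequenceMatcher`; the actual metric behind the 5% threshold is not specified above, and the prompt IDs are hypothetical.

```python
from difflib import SequenceMatcher

DRIFT_THRESHOLD = 0.05  # investigate when more than 5% of an answer has changed


def drift(baseline: str, current: str) -> float:
    """Fraction of difference between two answers (0.0 means identical)."""
    return 1.0 - SequenceMatcher(None, baseline, current).ratio()


def nightly_check(samples: dict[str, tuple[str, str]]) -> list[str]:
    """samples maps prompt id -> (baseline answer, tonight's answer).

    Returns the prompt ids whose answers drifted past the threshold.
    """
    return [
        prompt_id
        for prompt_id, (baseline, current) in samples.items()
        if drift(baseline, current) > DRIFT_THRESHOLD
    ]


samples = {
    "auth-401": ("Return HTTP 403 for unauthorized users.",
                 "Return HTTP 403 for unauthorized users."),
    "auth-402": ("Return HTTP 403 for unauthorized users.",
                 "Return HTTP 200 with an empty body."),
}
print(nightly_check(samples))  # ['auth-402']
```

Character similarity is a cheap first filter; it cannot tell a harmless rewording from a changed meaning, which is why flagged answers go to an investigation rather than an automatic rollback.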

We also monitor spending closely. Our FinOps dashboard tracks token usage in real time and alerts team leads if spending exceeds forecasts by 10%.

If you’re still defining who owns that dashboard, our primer on what a CTO does in a business explains how modern technical leaders balance innovation, risk, and cost control, exactly the levers AI governance depends on.

As a result, budgets remain transparent and aligned with organizational goals, fostering responsible resource management.
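The 10% overspend alert described above can be sketched as a comparison of token cost against each team’s forecast. The prices, team names, and volumes below are placeholders, not real rates or our actual usage; in practice the token counts would come from the provider’s usage data.

```python
from dataclasses import dataclass

OVERSPEND_THRESHOLD = 0.10  # alert when spend exceeds forecast by more than 10%


@dataclass
class Usage:
    team: str
    input_tokens: int
    output_tokens: int


def cost_usd(usage: Usage, price_in: float, price_out: float) -> float:
    """Prices are USD per million tokens; pass in your provider's current rates."""
    return (usage.input_tokens * price_in + usage.output_tokens * price_out) / 1_000_000


def overspend_alerts(usages: list[Usage], forecasts: dict[str, float],
                     price_in: float, price_out: float) -> list[str]:
    alerts = []
    for usage in usages:
        spent = cost_usd(usage, price_in, price_out)
        forecast = forecasts[usage.team]
        if spent > forecast * (1 + OVERSPEND_THRESHOLD):
            alerts.append(f"{usage.team}: ${spent:.2f} spent vs ${forecast:.2f} forecast")
    return alerts


# Placeholder prices and volumes, for illustration only.
usages = [Usage("qa-web", 4_000_000, 500_000), Usage("qa-mobile", 1_000_000, 200_000)]
forecasts = {"qa-web": 12.00, "qa-mobile": 5.00}
print(overspend_alerts(usages, forecasts, price_in=2.50, price_out=10.00))
# ['qa-web: $15.00 spent vs $12.00 forecast']
```

Input and output tokens are priced separately because most providers charge different rates for them.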

Using ChatGPT for early insights and Copilot for hands-on automation has cut our time from requirement to release by about 40%. It has also improved our engineering quality.

Our process is now faster without being reckless: fewer hand-offs, clearer stories, cleaner code, and audit documents that satisfy the strictest regulations.

We frequently remind clients that Ruby on Rails is a strong fit for SaaS, and we review the best Ruby on Rails hosting providers whenever we spin up new pipelines.

Integration into the QA Workflow

We weave generative AI and code assistants through five pivotal moments in our delivery pipeline, turning QA from a final gate into a continuous source of insight and speed.

Requirements Analysis – spotting ambiguity early

During backlog refinement, ChatGPT reviews each user story and points out unclear phrases. Words like “fast” or “robust” may seem clear until tested.
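A cheap deterministic pre-filter can catch the most common untestable words before a story even reaches the LLM review. The sketch below assumes a hand-maintained term list; the list and the `vague_terms` helper are hypothetical, and the model’s review remains responsible for subtler ambiguity.

```python
import re

# Hypothetical starter list: words that sound precise but cannot be tested as written.
VAGUE_TERMS = {
    "fast", "robust", "quickly", "user-friendly", "intuitive",
    "scalable", "seamless", "appropriate", "etc",
}


def vague_terms(story: str) -> list[str]:
    """Return the vague terms found in a user story, sorted and de-duplicated."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", story.lower())
    return sorted({word for word in words if word in VAGUE_TERMS})


story = "As a nurse, I want the dashboard to load fast and be user-friendly."
print(vague_terms(story))  # ['fast', 'user-friendly']
```

Each flagged word becomes a question for the product owner, for example turning “fast” into “loads within 2 seconds at the 95th percentile”.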

Sprint Planning – drafting cleaner user stories

Large-language models now propose INVEST-compliant stories complete with first-pass effort tags. The product owners still make the call but accept roughly four out of five suggestions without changes, cutting average planning time per story from 18 to 7 minutes.

Test Design – generating balanced case suites

The LLM combines domain embeddings with past defect patterns to suggest a variety of examples, including positive, negative, and edge cases.

Engineers curate the list rather than write it from scratch, and we’ve seen about a 30% drop in design and execution effort since adopting this model.
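One deterministic baseline a reviewer can hold LLM-suggested cases against is classic boundary-value analysis. The sketch below covers a single inclusive integer range and renders the result as a Gherkin `Examples:` table; the age rule and both helpers are hypothetical, and this is not a description of how the LLM itself chooses cases.

```python
def boundary_cases(low: int, high: int) -> dict[str, list[int]]:
    """Boundary-value analysis for an inclusive integer range [low, high]."""
    return {
        "positive": [low, (low + high) // 2, high],  # valid values
        "negative": [low - 1, high + 1],             # just outside the range
        "edge": [low, low + 1, high - 1, high],      # on and just inside each bound
    }


def gherkin_examples(field: str, cases: dict[str, list[int]]) -> str:
    """Render the cases as a Gherkin Examples table for a Scenario Outline."""
    rows = ["Examples:", f"  | {field} | kind |"]
    for kind, values in cases.items():
        rows.extend(f"  | {value} | {kind} |" for value in values)
    return "\n".join(rows)


# Hypothetical rule: patient age must be between 18 and 120 inclusive.
print(gherkin_examples("age", boundary_cases(18, 120)))
```

If the model’s suggestions omit any of these values, that gap is worth a second look before the suite is accepted.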

Automation Coding – letting Copilot handle the boilerplate

When we create new APIs or performance suites, GitHub Copilot helps by writing most of the basic code, like HTTP wrappers, data builders, and load-test setups.

This lets engineers concentrate on the more complex parts. Our internal measurements show that teams complete these tasks about 55% faster and that pull requests are finished in half the usual time.
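The “data builders” mentioned above usually follow the test data builder pattern: valid defaults plus per-test overrides. The sketch below shows the pattern with Python dataclasses; `PatientPayload` and its fields are a hypothetical request body, not a real JetRuby API.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class PatientPayload:
    # Hypothetical request body for a "create patient" endpoint.
    first_name: str = "Test"
    last_name: str = "Patient"
    birth_date: str = "1980-01-01"
    email: str = "test.patient@example.com"
    consent_given: bool = True


def a_patient(**overrides) -> dict:
    """Test data builder: valid defaults, override only what the test cares about."""
    return asdict(replace(PatientPayload(), **overrides))


minor = a_patient(birth_date="2015-06-30")
no_consent = a_patient(consent_given=False)
print(minor["birth_date"], no_consent["consent_given"])  # 2015-06-30 False
```

Each test then states only the field under test, which keeps assertions readable when the payload grows.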

CI Feedback Loop – learning from every test run

After tests finish, an agent condenses the noisy logs into structured events and streams them into our Elastic (ELK) stack.

Elastic’s built-in machine-learning jobs establish baselines for log volume and message patterns, flagging anomalies in minutes instead of hours and shrinking mean-time-to-diagnosis for flaky tests or environment drift.
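The log-condensing step can be sketched as parsing raw lines, masking variable numbers, and collapsing repeats into one structured event per pattern before shipping to Elasticsearch. The sketch assumes a simple `timestamp LEVEL [service] message` line format; the keys follow Elastic Common Schema (ECS) names where one exists, and `occurrences` is a custom field. None of this is the agent we actually run.

```python
import json
import re
from collections import Counter

LINE = re.compile(r"^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+\[(?P<service>[^\]]+)\]\s+(?P<msg>.*)$")


def pattern_of(message: str) -> str:
    # Mask numbers so "Timeout after 30s" and "Timeout after 31s" group together.
    return re.sub(r"\d+", "<n>", message)


def condense(lines: list[str]) -> list[str]:
    """Collapse WARN/ERROR lines into one JSON event per (service, level, pattern)."""
    first_seen: dict[tuple[str, str, str], str] = {}
    counts: Counter = Counter()
    for line in lines:
        match = LINE.match(line)
        if not match or match["level"] not in {"WARN", "ERROR"}:
            continue  # skip unparseable lines and routine INFO noise
        key = (match["service"], match["level"], pattern_of(match["msg"]))
        counts[key] += 1
        first_seen.setdefault(key, match["ts"])
    return [
        json.dumps({
            "@timestamp": first_seen[key],
            "log.level": key[1].lower(),
            "service.name": key[0],
            "message": key[2],
            "occurrences": count,  # custom field, not part of ECS
        })
        for key, count in counts.items()
    ]


log = [
    "2024-05-01T10:00:01Z INFO [api] GET /patients 200",
    "2024-05-01T10:00:02Z ERROR [checkout] Timeout after 30s",
    "2024-05-01T10:00:05Z ERROR [checkout] Timeout after 31s",
    "2024-05-01T10:00:07Z WARN [db] Slow query took 1200ms",
]
for event in condense(log):
    print(event)
```

Grouping by masked pattern is what turns hundreds of near-identical lines into a handful of events, which gives the anomaly jobs a cleaner signal.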

Since adopting this AI-enabled workflow, we have cut our defect-detection cycle by 22%, expanded coverage by 25%, and built a self-learning quality pipeline that scales with product complexity.

Automated Tasks and Efficiency Gains

In the last two quarters, our generative-AI toolkit has taken over most of the routine tasks in Quality Engineering.

It now handles reading specifications, turning them into detailed user stories, creating test matrices for CRUD operations, and generating predictable data for load tests.

- Requirement analysis lead time shrank by about two-thirds, from three working days to one, which now aligns neatly with our sprint-planning cadence.

- AI-assisted test-case ideation throughput improved by 50–70 %, enabling the team to author depth-oriented negative cases that would have been deprioritized in the past.

- API-test coverage that once took a whole work week is now achieved within hours, thanks to large-language-model (LLM) pattern mining.
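The “predictable data for load tests” mentioned above usually means seeded generation: the same seed yields the same payloads on every run (with the same Python version), so two load-test runs stay comparable. The field names and value ranges below are hypothetical placeholders.

```python
import random


def synthetic_appointments(n: int, seed: int = 42) -> list[dict]:
    """Deterministic load-test payloads: the same seed always yields the same data."""
    rng = random.Random(seed)  # private generator, unaffected by global random state
    clinics = ["cardiology", "oncology", "pediatrics"]
    return [
        {
            "appointment_id": f"APT-{i:06d}",
            "clinic": rng.choice(clinics),
            "duration_min": rng.choice([15, 30, 45, 60]),
            "telehealth": rng.random() < 0.3,
        }
        for i in range(n)
    ]


batch = synthetic_appointments(1_000)
print(batch[0]["appointment_id"])  # APT-000000
```

Using a dedicated `random.Random` instance, rather than the module-level functions, keeps other code that touches the global generator from changing the data.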

We apply AIQA on web and mobile products, with particular depth in our HIPAA-compliant healthcare suite.

We have integrated these practices into our daily operations, which has enhanced our processes and produced measurable outcomes. Gartner projects that by 2025, 30 % of enterprises will have instituted comparable AI-augmented dev-and-test strategies, up from <5 % in 2021.

Benefits and ROI of AI in QA

Finance stakeholders track three macro metrics: speed, stability, and labor redeployment. Two results stand out:

- Release-prep lead time is down 20–30 %, accelerating the entire CI/CD pipeline without compromising gate quality.

- Engineers use their time better. They now spend about 45% of their work on testing new ideas and improving how the product feels to users, instead of just 20% before. This helps them learn more about the product without needing more team members.

Zooming out, McKinsey estimates that generative AI could add $2.6 to 4.4 trillion in economic value annually, with software engineering among the four functions that account for most of it. Our localized wins are part of that much larger trend.

AI in QA Case Study Example

In 2024, a team worked on an oncology data portal project with multiple institutions involved. They needed to review over 300 requirements pages, which usually took three analysts an entire sprint to break down.

Instead, our customized version of ChatGPT produced 127 user stories with acceptance criteria in under four hours. The team found four conflicting chemotherapy dosage rules during the process, preventing 6 to 8 potential defects and saving about 15% of the project budget.

We used the same tool for sprint planning to generate numerous Gherkin scenarios. The QA lead accepted most of them in just two days, a task that previously took a week with three people, resulting in a faster cycle time and enabling earlier exploratory testing.

These results are consistent with the industry trends cited earlier.

Key challenges and limitations when introducing AI into QA

Integrating AI into quality assurance brings significant benefits, but it also creates challenges that teams must manage, from skill gaps to compliance issues.

Below are the five main challenges we see and practical ways to address them.

#1. Learning to “speak AI”

Even experienced test engineers need a few sprints to learn effective prompting. A shared prompt guide and support from an “AI steward” can help the team learn quickly.

#2. Protecting sensitive data

When confidential data must stay on-premises, all prompts and model runs must stay on company hardware. This setup is about 25% slower than cloud solutions, but it satisfies compliance requirements.

#3. Keeping results consistent

Large language models often provide different answers. To manage this, many teams use an “AI-draft, human-final” method: the model creates a draft, a reviewer saves it in Git, and future drafts are compared to the approved version.
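Comparing a new draft against the approved version in Git can start with something as simple as diffing the scenario titles. The sketch below assumes both versions are Gherkin feature files read as text; the helpers and the example feature are hypothetical. A full line diff would still be needed to catch changed steps inside a scenario.

```python
import re

SCENARIO = re.compile(r"^[ \t]*Scenario(?: Outline)?:[ \t]*(.+?)[ \t]*$", re.MULTILINE)


def scenario_titles(feature_text: str) -> set[str]:
    return set(SCENARIO.findall(feature_text))


def compare_to_approved(approved: str, draft: str) -> dict[str, list[str]]:
    """Show which scenarios a new AI draft adds or drops relative to the Git baseline."""
    old, new = scenario_titles(approved), scenario_titles(draft)
    return {"added": sorted(new - old), "removed": sorted(old - new)}


approved = """Feature: Lab result export
  Scenario: Export a single result
  Scenario: Export with no results
"""
draft = """Feature: Lab result export
  Scenario: Export a single result
  Scenario: Export a 500-page report
"""
print(compare_to_approved(approved, draft))
# {'added': ['Export a 500-page report'], 'removed': ['Export with no results']}
```

A silently dropped scenario is the riskier signal, so reviewers may want to treat any non-empty `removed` list as blocking.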

#4. Fragile end-to-end tests

Vision-language models have difficulty with dynamic CSS selectors, Shadow DOM elements, and changing component IDs, causing a 30% locator churn.

For critical flows, we still hand-write page objects. AI-generated locators work best for low-risk smoke tests that are regenerated whenever the UI changes.

#5. Limited domain insight

Generic models often miss key details in specialized workflows, overlook rare situations, and make broad claims without domain-specific qualifications. Supplying relevant domain documents improves accuracy, but those documents need regular updates.

Team Perspectives and Future Outlook

Will AI eventually replace manual testing, or will it remain a support tool?

Artificial Intelligence is redefining the QA landscape, but full replacement of manual testing remains out of reach, especially for safety-critical or UX-sensitive systems.

Human testers excel at empathy, creativity, and exploratory testing, while AI handles routine tasks. This division lets QA engineers focus on product quality and user value.

JetRuby Roadmap for AI in QA and How We See the Field Evolving

JetRuby is using AI to transform quality assurance, combining intelligent systems with human skills more effectively. Our roadmap treats QA as a key driver of product success.

We develop tools that help testers spend less time on repetitive tasks, so they can work more on inclusive, user-friendly digital experiences.

Here’s how we see AI reshaping quality assurance today, tomorrow, and beyond.

Universal adoption across projects

We are scaling our AI test-suite orchestration platform to every delivery team so that predictive defect detection and self-healing pipelines become the default, not the exception.

Domain-specific models for healthcare

Because medical workflows demand near-zero tolerance for error, we fine-tune large-language and vision models with curated clinical datasets to catch edge-case failures that generic AI overlooks.

Automated visual & accessibility testing

We train computer vision networks to spot layout drift, color-contrast violations, and screen-reader issues in milliseconds, giving us immediate feedback on inclusivity and brand consistency.
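Whatever model extracts the colors, the contrast rule itself is deterministic. The sketch below implements the WCAG 2.x relative-luminance and contrast-ratio formulas with the AA thresholds (4.5:1 for normal text, 3:1 for large text); it assumes foreground and background colors have already been extracted as hex strings, which is the part the vision model would handle.

```python
def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB color such as "#1a2b3c"."""
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]

    def linearize(c: float) -> float:
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in channels)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    # WCAG AA: at least 4.5:1 for normal text, 3:1 for large text.
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#777777", "#ffffff"), 2))  # 4.48
```

Mid-gray `#777777` on white narrowly fails AA for body text, the kind of near-miss that is easy to overlook in a visual review.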

Internal QA AI-assistant

A conversational agent (currently awaiting client API access) will ingest build artifacts, test results, and product specs, then answer “why did this fail?” or “what should I test next?” in plain language.

Looking 2–3 years ahead

AI will sit beside every tester as a real-time co-pilot, eliminating rote setup, environment wrangling, and redundant execution.

The QA engineer’s centre of gravity will shift toward holistic product quality and user experience stewardship, ensuring the right problems are solved, not just that the code works as written.

The future of testing is not about choosing between AI and manual testing.

Instead, it is about using both together in symbiosis. We can let machines handle routine checks, while humans focus on improving quality in a way that centers on the user.

AIQA has turned our QA process into a helpful partner for product and engineering.

Seeking strategic technical leadership beyond QA?

JetRuby’s CTO Co-Pilot helps your organization by connecting you with experienced architects and specialists in various fields.

They solve complex architectural and infrastructure problems, support the use of new technologies, and back every suggestion with thorough research and validation.

Get quick access to top experts without the delays and costs of traditional hiring.

Schedule a free discovery call or message us through our Contact Us form.