AI Test Automation: Using AI to Generate Unit Tests Automatically

Learn how AI test automation speeds up unit test generation, raises coverage, and cuts release time. See examples, tools, and JetRuby expertise.

Fixing defects after release can cost 30× more than catching them in unit tests, yet teams often postpone testing because writing unit tests by hand is tedious.

Unit tests are quick, focused checks for behaviors like handling negative numbers, invalid inputs (“abc”), or large values.

Successful tests build trust in systems.

However, writing tests by hand can consume a third of sprint time, invites human error that makes results unreliable, raises costs, and lowers morale.

As systems scale, the manual process stops keeping up, and a business that ignores innovations such as AI unit test generation risks losing engineers to turnover.

So, instead of fearing that AI will take their jobs, forward-thinking engineers use AI test automation to reduce manual effort, along with the other routine tasks that AI can optimize.

AI automation elevates coverage and reliability, integrates into CI/CD pipelines, and offers measurable quality gains, helping teams escape “quality quicksand” while aligning testing with developer efficiency goals.

In this article, we’ll look at how to create unit tests with AI and counter manual testing pitfalls.

Key Takeaways

- AI unit tests help find quality issues earlier, speeding up feedback and making releases faster.

- Automated tools can achieve broad, mutation-tested coverage in minutes, freeing engineers for high-value design work.

- The most effective strategy pairs AI’s speed with developer insight — using generated suites as a starting point, not a replacement.

- Treat AI output like regular code — review it, version it, and measure it to avoid complacency and keep trust.

- Fewer maintenance hours and earlier bug detection lead to real cost savings and quicker time-to-market.

AI Unit Tests: How It Works

Think of an AI assistant as a tireless quality-assurance engineer who surveys an entire codebase and drafts tests within seconds.

Large language models (LLMs), such as GPT-4 Turbo and the models behind Amazon CodeWhisperer and GitHub Copilot, have been trained on billions of lines of open-source code.

From this training, they can infer, for example, that a function named calculateShippingCost should never return a negative value and that an overnight option typically increases the price.

The assistant’s workflow unfolds in three passes:

- Static inspection parses each function’s signature and control-flow graph, noting inputs, branches, and documented constraints.

- Semantic reasoning processes docstrings, inline comments, and identifier names to infer normal and boundary behaviour.

- Test synthesis covers every discovered execution path. It fabricates representative inputs, predicts outputs, and renders assertions in the project’s preferred framework, such as RSpec for Ruby, Jest for Node.js, JUnit for Java, or Kotest for Kotlin, so continuous-integration pipelines require no alteration.
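The first of these passes can be sketched with Python's standard `ast` module. This is only an illustration of static inspection, not how any particular assistant is implemented; the sample function, its prices, and the `inspect_function` helper are assumptions made up for the example.

```python
import ast
import textwrap

# Hypothetical function under inspection (prices are invented).
SOURCE = textwrap.dedent('''
    def calculate_shipping_cost(weight_kg, distance_km, speed="regular"):
        """Return the shipping price. weight_kg must be > 0."""
        if weight_kg <= 0:
            raise ValueError("weight must be positive")
        cost = 5.0 + 0.5 * weight_kg + 0.1 * distance_km
        if speed == "overnight":
            cost *= 1.5
        return cost
''')


def inspect_function(source: str) -> dict:
    """Collect the facts a test generator needs from one function."""
    func = ast.parse(source).body[0]
    nodes = list(ast.walk(func))
    return {
        "name": func.name,
        "params": [arg.arg for arg in func.args.args],
        "docstring": ast.get_docstring(func),
        # Each `if` is a branch that needs a test on both sides.
        "branches": sum(isinstance(n, ast.If) for n in nodes),
        # Each `raise` is an error path that needs a failing-input test.
        "raises": sum(isinstance(n, ast.Raise) for n in nodes),
    }


facts = inspect_function(SOURCE)
print(facts["name"], facts["params"], facts["branches"], facts["raises"])
```

Here the inspection finds two branches and one error path, which is the minimum set of scenarios the synthesis pass would then need to cover.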

The practical payoff is coverage of edge conditions that human authors often overlook, raising defect detection before a feature reaches production.

How AI Understands Your Code to Generate Tests

LLMs recognise programming patterns much as linguists recognise grammar: by exposure to massive corpora. (If you want a deeper dive into the data-side mechanics, JetRuby’s primer on understanding AI training data lays it out clearly.)

Pattern recognition

Decades of public repositories teach the model that a parameter named weightKg must be positive, that lists may be empty, and that network requests can time out.

Contextual clues

Natural-language comments such as “weight must be > 0” tell the model to include a failure scenario where weight equals zero.

Branch-coverage heuristics

Advanced tools, including our own plugin deployed at Acme Tech, blend static analysis with AI suggestions. The combined engine refuses to stop until each conditional branch, guard clause, and exception path is exercised.

A routine that processes a list will spur the assistant to propose at least three scenarios: an empty list, a typical populated list, and a list containing malformed values.

The resulting assertions confirm the method returns expected results, throws the correct exceptions, or logs the appropriate warnings.
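As a rough sketch of those three list scenarios, written with Python's built-in `unittest`: the `total_weight` routine and the `weights` logger name are hypothetical stand-ins, chosen only to show one test per scenario.

```python
import logging
import unittest

logger = logging.getLogger("weights")  # hypothetical logger name


def total_weight(items):
    """Sum numeric weights, skipping (and logging) malformed values."""
    total = 0.0
    for item in items:
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(item, bool) or not isinstance(item, (int, float)):
            logger.warning("skipping malformed weight: %r", item)
            continue
        total += item
    return total


class TotalWeightTests(unittest.TestCase):
    def test_empty_list_returns_zero(self):
        self.assertEqual(total_weight([]), 0.0)

    def test_typical_list_is_summed(self):
        self.assertEqual(total_weight([1.5, 2, 3.5]), 7.0)

    def test_malformed_values_are_skipped_and_logged(self):
        with self.assertLogs("weights", level="WARNING") as captured:
            result = total_weight([2, "abc", None, 3])
        self.assertEqual(result, 5.0)
        self.assertEqual(len(captured.output), 2)  # one warning per bad value
```

Saved as a module, the suite runs with `python -m unittest`.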

From Code to Test: Unit Test Example

Imagine the following flow performed inside Visual Studio Code with our “Generate Test” command:

The routine under scrutiny calculates shipping cost based on weight, distance, and delivery speed. A guard clause throws an error if the weight is not positive.

A developer selects the function and creates a prompt for the assistant: “Draft unit tests covering normal, premium, and error cases.”

Within seconds, the AI assistant proposes three tests:

- Standard shipment — ten kilograms over one hundred kilometres by regular service returns the base price plus incremental fees.

- Overnight premium — the same inputs with overnight speed include a surcharge, validating the premium branch.

- Invalid weight — a zero-kilogram request triggers the function’s error path and confirms the correct exception message.

Each test arrives formatted for Jest, complete with descriptive names, setup, execution, and assertions.

In practice, we commit these files alongside developer-written tests. Continuous integration runs them without further configuration.
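For readers who want to see the shape of such output, here is a Python `unittest` analogue of the three proposed tests (the flow above produces Jest files). The pricing constants and the exact error message are assumptions invented for this sketch, since the article does not specify them.

```python
import unittest

# Hypothetical pricing rules, invented for the example.
BASE_PRICE = 5.00
PER_KG = 0.50
PER_KM = 0.10
OVERNIGHT_SURCHARGE = 20.00


def calculate_shipping_cost(weight_kg, distance_km, speed="regular"):
    """Price a shipment; the guard clause rejects non-positive weights."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    cost = BASE_PRICE + PER_KG * weight_kg + PER_KM * distance_km
    if speed == "overnight":
        cost += OVERNIGHT_SURCHARGE
    return cost


class ShippingCostTests(unittest.TestCase):
    def test_standard_shipment_returns_base_plus_fees(self):
        self.assertAlmostEqual(calculate_shipping_cost(10, 100), 20.00)

    def test_overnight_shipment_adds_surcharge(self):
        regular = calculate_shipping_cost(10, 100)
        overnight = calculate_shipping_cost(10, 100, speed="overnight")
        self.assertAlmostEqual(overnight - regular, OVERNIGHT_SURCHARGE)

    def test_zero_weight_raises_with_message(self):
        with self.assertRaisesRegex(ValueError, "weight must be positive"):
            calculate_shipping_cost(0, 100)
```

Each test maps to one of the three cases in the prompt: normal, premium, and error.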

Benefits of AI Unit Test Generation: Speed, Coverage, and Confidence

Artificial intelligence has made unit test generation faster and easier. Instead of writing every test by hand, developers can now use AI tools, which have learned from large codebases, to generate tests in minutes.

This change offers four key benefits:

- Automated generation helps developers spend less time writing repetitive code, so they can focus more on improving core features.

- AI helps identify tests for unusual cases and potential problems people might miss. This improves overall coverage and protects complex logic.

- AI-generated tests follow standard industry practices, which reduces the chance of human mistakes, improves quality, and maintains a consistent structure as the code changes over time.

- Quick testing and early bug detection speed up delivery times, lower fixing costs, and build trust with customers who face fewer issues in production.

For Developers: Faster Test Creation and Better Coverage

AI-powered testing offers businesses key benefits like speed, thoroughness, and reliability. These advantages create a solid base for success, but the real impact is seen in the everyday work of developers.

- When routine assertions are written in seconds, engineers can channel their effort into architecture, performance tuning, and innovative problem-solving rather than boilerplate.

- AI detects edge cases early, suggesting scenarios such as boundary values, malformed inputs, or intermittent network failures that might otherwise surface only after deployment.

- Reviewer-friendly tests move faster, and technical debt declines because generated suites follow consistent naming, setup, and teardown conventions.

- Developers have greater confidence in their daily work because they know that a wider spectrum of paths is covered. It lets them refactor boldly, reducing stress and enhancing job satisfaction.

For Business Stakeholders: Faster Delivery and Higher Quality

While developers see immediate improvements in their productivity and code quality, these technical benefits lead to real business outcomes.

AI-powered testing makes workflows more efficient, changing how teams work together and companies compete.

By helping engineers innovate more quickly and accurately, organizations can gain strategic advantages:

- Automated unit test generation cuts weeks from timelines, shortening release cycles and accelerating market entry.

- Pre-deployment bug detection reduces defect expenses (up to 10x cheaper than post-launch fixes), safeguarding budgets and reputation.

- Stronger customer loyalty – Reliable software reduces crisis management and boosts user satisfaction and NPS.

- Proven innovation – We at JetRuby leverage AI testing to showcase technical leadership, securing contracts with guarantees of speed and measurable quality gains.

Traditional testing depends on human skills, but even the best engineers can miss unusual edge cases in modern systems.

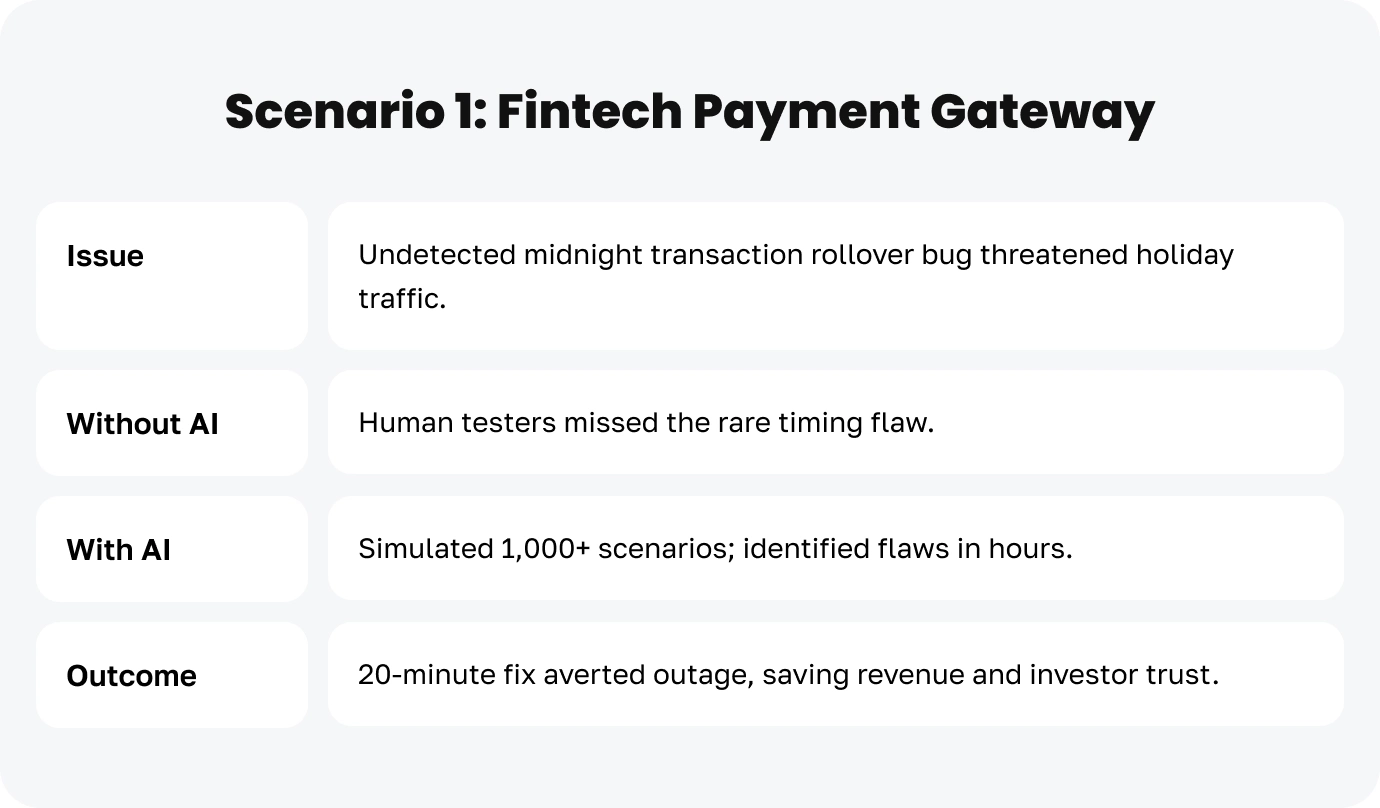

For example, what happens when a midnight timestamp conflict crashes a payment system? Or when a weather app fails to handle subzero temperatures, frustrating users in cold climates?

This is where AI unit testing steps in and analyzes code behavior, simulates unpredictable scenarios, and flags risks that evade manual checks.

Here, AI acts like a detail-obsessed co-pilot. It designs tests, uncovering gaps in logic, timing anomalies, or data quirks that humans might overlook.

The result?

Quicker software releases, fewer urgent fixes, and more reliable systems that can handle surprises.
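The two edge cases mentioned above, subzero temperatures and the midnight boundary, might translate into tests like the following. Both helper functions are hypothetical and exist only to give the tests something to exercise.

```python
import unittest
from datetime import datetime, timedelta


def format_temperature(celsius: float) -> str:
    """Render a whole-degree label; readings below zero keep their sign."""
    return f"{round(celsius)}°C"


def seconds_until_midnight(now: datetime) -> int:
    """Whole seconds from `now` to the next 00:00:00."""
    next_midnight = (now + timedelta(days=1)).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    return int((next_midnight - now).total_seconds())


class EdgeCaseTests(unittest.TestCase):
    def test_subzero_reading_keeps_its_sign(self):
        self.assertEqual(format_temperature(-12.6), "-13°C")

    def test_reading_just_below_zero_is_not_negative_zero(self):
        self.assertEqual(format_temperature(-0.4), "0°C")

    def test_last_second_of_the_day(self):
        late = datetime(2025, 1, 31, 23, 59, 59)
        self.assertEqual(seconds_until_midnight(late), 1)

    def test_exactly_midnight_counts_a_full_day(self):
        midnight = datetime(2025, 1, 31, 0, 0, 0)
        self.assertEqual(seconds_until_midnight(midnight), 86400)
```

Boundary inputs like these are easy to skip when writing tests by hand, which is why they make good candidates for generated suggestions.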


While human experts set the direction, AI works alongside them to identify risks that often go unnoticed.

This collaboration is especially powerful when teams pair AI tools like GitHub Copilot with a solid grounding in writing unit tests by hand, a strategy that combines automation with human precision.

Popular AI Tools for Generating Unit Tests

AI has matured from “nice-to-have” to “must-have” in modern quality pipelines.

Many unit test generators are available now that can create entire test suites on demand, integrate directly with IDEs, and meet corporate privacy rules.

The options listed below include the standard technology stacks used by JetRuby — Ruby on Rails (perfect for SaaS), Node/React, and Java/Kotlin microservices.

GitHub Copilot & Other AI Code Assistants

GitHub Copilot test generation remains the reference point for real-time AI pair-programming.

Inside VS Code or JetBrains IDEs, it proposes test functions the moment a developer writes a test name or a comment, sparing teams from boilerplate.

In Copilot Chat, the /tests command drafts unit tests for the selected code, and agent mode can iterate on failing tests automatically, shortening feedback loops.

For organisations that need alternatives or additional privacy modes, Amazon CodeWhisperer (now part of Amazon Q Developer) and Tabnine perform similar inline completions.

CodeWhisperer is a managed AWS service rather than an on-premises product. Its paid tier adds SSO through AWS IAM Identity Center, and it can suggest RSpec, JUnit, or Jest tests for Ruby, Java, JavaScript, Python, and Kotlin code.

Tabnine has added a dedicated “AI test agent” that drafts thorough test plans and cases across 15+ languages, all while keeping code private.

Together, these assistants make AI unit test generation a natural part of everyday coding rather than a separate phase.

Diffblue Cover (Java/Kotlin)

Diffblue Cover specialises in automatically writing JUnit tests for any JVM codebase. Its static-analysis engine explores each method path, then emits assertion-rich tests that routinely expose edge-case defects.

The April 2025 release introduced support for composite primary keys in Spring Data JPA, REST endpoints for coverage metrics, and a revamped UI plug-in, illustrating sustained R&D investment and enterprise readiness.

Ponicode (Test Generation for JavaScript & Python)

Initially a Paris start-up, Ponicode offered a VS Code extension that generated Jest or pytest suites with a single click. CircleCI acquired the technology and embedded it straight into the CI/CD pipeline.

As a result, tests appear automatically in pull requests, a good fit for the Node.js and React components that dominate front-end work at JetRuby. The acquisition signals the strategic value CI vendors see in AI-assisted quality.

ChatGPT & GPT-Based Assistants

General-purpose LLMs such as ChatGPT (GPT-4 & successors) remain a flexible fallback: paste a Rails model or describe a Kotlin service, and the assistant returns plausible RSpec or Spek tests.

This model-agnostic freedom is ideal for early brainstorming or for edge cases that specialized tools overlook.

However, your team must evaluate confidentiality. Sensitive repositories should route prompts through private deployments or secure VPN endpoints.

Other Noteworthy Tools

There are a few other AI automation tools worth mentioning.

Qodo (formerly CodiumAI)

Free IDE plug-in that analyses full files and proposes intent-based tests for Python, JavaScript/TypeScript, and Java. It’s now evolving into an agentic “quality flow” platform.

TestGPT (open source)

CLI that calls OpenAI or local LLMs to auto-author tests; popular for projects where vendor lock-in is a concern.

Amazon CodeWhisperer (Professional tier)

Mentioned above; notable for its SSO support and AWS console integration.

Tabnine

Covered above; adds protected-model variants for regulated industries.

Collectively, their adoption curves show that AI-generated testing has progressed from prototype to mainstream.

StarCoder 2, Code Llama 70B-Instruct, and DeepSeek-Coder 33B

Open-weight code LLMs such as StarCoder 2, Code Llama 70B-Instruct, and DeepSeek-Coder 33B jumped from hobby projects to mainstream dev tooling in 2024-25.

GitLab, Mozilla AI, and infosec researchers all report a surge of regulated-sector firms swapping SaaS Copilots for on-prem installs to meet tighter GDPR / EU AI Act rules.

Why everyone is going self-hosted

- Privacy pressure – GitLab’s Duo Self-Hosted launch notes public-sector, finance, and healthcare customers choosing on-prem for data-residency control.

- Security wake-up call – A March 2025 Pillar Security paper shows 97 % of enterprise devs use AI assistants and details a Copilot supply-chain exploit, pushing CISOs toward local gateways.

- Regulatory squeeze – The EU AI Act now forces open-source LLMs to publish training-data summaries, sharpening audit expectations for European deployments.

- Operational control – Mozilla.ai highlights that owning the weights lets organisations pick the latency, price-per-token, and audit depth they need.

| Model | Hardware | Strengths | Costs and limitations |
| --- | --- | --- | --- |
| StarCoder 2 | 3B and 7B on a single RTX 4090; 15B needs ≈ 80 GB VRAM | 16k context, Apache-2, 600+ languages | 15B ≈ $0.40 per 1M tokens (power + depreciation); no native instruction tuning |
| Code Llama 70B-Instruct | 2×H100 80 GB or 4×A100 40 GB | Top zero-shot accuracy, chat + infilling | Heavy (≈ $2.4 per 1M tokens); Llama licence forbids weight resale |
| DeepSeek-Coder 33B | 2×A100 80 GB; needs > 66 GB VRAM un-quantised | Built-in repo-RAG, bilingual (EN/ZH) | Latency; mixed licence; quantisation can hurt syntax |

Shared upsides — full data sovereignty, custom fine-tuning/RAG, stable latency.

Shared downsides — GPU cap-ex, MLOps overhead, and a steep skill curve.

How to stop code & PII leakage (GDPR/AI-Act traceability)

- Self-hosted LLM Gateway – central auth, rate-limit, write-ahead WORM logs (Envoy AI Gateway, Gloo, TGI + policy hooks).

- Zero-trust prompt-proxy – mTLS, per-user SAC, secret/PII regex deny-lists; real-time redaction before the model sees a byte.

- IDE mask-filters – plugin strips blocks tagged // NOAI, redacts API keys (AKIA…→***AWS-KEY***), sends only signatures + TODO, fulfilling GDPR data-minimisation.

- End-to-end UUID trace – request ID in header → Gateway DB → proxy log → SIEM dashboard, stored 24 months.

- Red-team inference drills – attack with “repeat last 200 tokens”; proxy must block, Gateway logs, SOC alerted – proving the guard-rail chain works.
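A minimal sketch of the IDE mask-filter and request-ID steps from the list above, using only the standard library. The `// NOAI` … `// END NOAI` block convention, the `mask_prompt` name, and the returned dictionary shape are assumptions made for illustration; a production filter would need far broader secret detection than one regex.

```python
import re
import uuid

# AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY = re.compile(r"\bAKIA[0-9A-Z]{16}\b")

# Hypothetical convention: a block opens with "// NOAI" and closes with
# "// END NOAI", each on its own line.
NOAI_BLOCK = re.compile(
    r"^[ \t]*// NOAI$.*?^[ \t]*// END NOAI$\n?",
    re.DOTALL | re.MULTILINE,
)


def mask_prompt(source: str) -> dict:
    """Strip tagged blocks, redact keys, and attach a traceable request ID."""
    cleaned = NOAI_BLOCK.sub("", source)
    cleaned = AWS_KEY.sub("***AWS-KEY***", cleaned)
    return {"request_id": str(uuid.uuid4()), "prompt": cleaned}


SAMPLE = """\
const region = "eu-west-1";
// NOAI
const pricingSecret = loadPricingRules();
// END NOAI
const accessKey = "AKIAIOSFODNN7EXAMPLE";
"""

masked = mask_prompt(SAMPLE)
print(masked["prompt"])
```

The request ID is what would travel in the header to the gateway and on to the SIEM, so one prompt can be traced end to end.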

With this Gateway → proxy → IDE masking stack, enterprises enjoy the speed of StarCoder 2, Code Llama 70B, or DeepSeek-Coder 33B while keeping every proprietary line safely inside the perimeter.

AI Test Work in Practice

AI-driven testing provides faster results and broader coverage, but we need a smart mix of new ideas and practical steps to fully benefit from it.

Success depends on the test generation software we use and how well we integrate it into our processes.

Start Small, Then Scale

Pilot the AI on a non-critical module (e.g., a contact form) before rolling it out to core services.

Treat the AI as a Junior Colleague

Review and refine the generated tests, and feed corrections back to the assistant. If a rule such as “Discounts cannot exceed 50 %” is missed, add it explicitly.
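Adding a missed business rule by hand could look like this. The `apply_discount` function and its error message are hypothetical; only the 50 % cap comes from the rule quoted above.

```python
import unittest

MAX_DISCOUNT_PERCENT = 50  # business rule: discounts cannot exceed 50 %


def apply_discount(price: float, percent: float) -> float:
    """Return the discounted price, enforcing the discount cap."""
    if not 0 <= percent <= MAX_DISCOUNT_PERCENT:
        raise ValueError("discount must be between 0 and 50 percent")
    return round(price * (1 - percent / 100), 2)


class DiscountRuleTests(unittest.TestCase):
    def test_discount_at_the_cap_is_allowed(self):
        self.assertEqual(apply_discount(200, 50), 100.0)

    def test_discount_above_the_cap_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(200, 50.01)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(200, 0), 200.0)
```

Once a test like this sits in the repository, the assistant also sees the rule as context the next time it drafts tests for the same module.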

Protect Intellectual Property

Use Copilot for Business, the paid CodeWhisperer tier, or Tabnine Protected to avoid external leakage. Our roundup of the 8 best Ruby on Rails hosting providers can help keep deployment friction-free.

Automate Maintenance

Wire test generation into CI/CD so every commit refreshes tests. The quality gains are measurable: GitHub’s own analysis of enterprise repositories shows that developers using Copilot wrote 13.6% more lines of code per error.

In a 2025 paper on arXiv, researchers introduced an updated version of GitHub Copilot that acts as an independent testing agent. They report a 31.2% improvement in bug-detection accuracy and a 12.6% increase in critical-test coverage.
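One small building block for wiring generation into CI is deciding which changed files need fresh tests. The sketch below assumes a hypothetical layout in which `src/pkg/foo.py` is tested by `tests/pkg/test_foo.py`; in a real pipeline the two input lists would come from the version-control diff and the repository tree.

```python
from pathlib import PurePosixPath


def expected_test_path(source_file: str) -> str:
    """Map src/pkg/foo.py to tests/pkg/test_foo.py (hypothetical convention)."""
    relative = PurePosixPath(source_file).relative_to("src")
    return str(PurePosixPath("tests") / relative.parent / f"test_{relative.name}")


def files_needing_tests(changed_files, existing_tests):
    """Return changed Python sources whose expected test file is absent."""
    existing = set(existing_tests)
    return [
        f for f in changed_files
        if f.startswith("src/") and f.endswith(".py")
        and expected_test_path(f) not in existing
    ]


changed = ["src/billing/invoice.py", "src/shipping/cost.py", "README.md"]
tests = ["tests/shipping/test_cost.py"]
print(files_needing_tests(changed, tests))  # ['src/billing/invoice.py']
```

A CI job could pass the resulting list to whichever generator the team uses and open the drafted tests for review rather than merging them automatically.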

Invest in People & Process

JetRuby’s personalized development plans help developers trust and extend AI suggestions, smoothing cultural adoption. Our hiring process for top engineers offers the inside scoop for applicants wondering how we hire the talent behind those plans.

With the right blend of these tools and practices, AI-assisted unit testing can compress schedules, raise coverage, and ultimately boost delivery confidence across Ruby, JavaScript, and Kotlin projects alike.

Making AI Tests Effective: Best Practices

AI-generated testing is changing software development quickly. However, to use test generation software well, teams should balance automation and careful review while closely following project goals.

Key principles for using AI in testing workflows include:

- Keep humans in the loop. Every AI-generated test should pass a developer review to verify relevance, adjust assumptions, and prevent false confidence.

- Integrate AI deeply, not sparingly. Invoke generation while authoring new features and let the pipeline request fresh tests whenever code evolves. Continuous integration keeps coverage current.

- Improve, don’t just approve. Developers manage exceptional cases and enhance checks, while AI handles simple tasks, allowing experts to focus on depth and accuracy.

- Safeguard proprietary code. When confidentiality matters, opt for enterprise-grade services such as GitHub Copilot for Business or on-premises large-language-model deployments so stakeholders know IP remains protected.

- Invest in prompt craftsmanship. Clear function descriptions, thoughtful comments, and incremental feedback help AI learn project conventions, elevating test quality sprint after sprint.

Following these guidelines ensures both engineering and business teams extract maximum value while mitigating typical adoption risks.
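One way to check whether a generated test deserves trust is a mutation check: alter the code slightly and see whether the test notices. The hand-rolled sketch below uses a hypothetical free-shipping rule with a made-up threshold of 100; dedicated mutation-testing tools automate the same idea across a whole codebase.

```python
def is_free_shipping(order_total: float) -> bool:
    """Original rule: orders of 100 or more ship free (made-up threshold)."""
    return order_total >= 100


def mutant_is_free_shipping(order_total: float) -> bool:
    """Mutant: the boundary operator was changed from >= to >."""
    return order_total > 100


def weak_test(fn) -> bool:
    """Checks only values far from the boundary."""
    return fn(150) is True and fn(20) is False


def boundary_test(fn) -> bool:
    """Adds the boundary value a reviewer would insist on."""
    return weak_test(fn) and fn(100) is True


def kills_mutant(test) -> bool:
    """A test kills the mutant if it passes on the original and fails on the mutant."""
    return test(is_free_shipping) and not test(mutant_is_free_shipping)


print(kills_mutant(weak_test), kills_mutant(boundary_test))  # False True
```

The weak test passes on both versions, so it gives false confidence; the boundary test catches the change.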

The Future of Unit Testing with AI: How JetRuby Can Help You Integrate Your AI Test Initiatives

AI-driven quality is already reshaping software delivery. Yet frameworks, data policies, and organizational culture differ from one company to the next.

JetRuby’s CTO Co-Pilot helps companies solve problems and, therefore, grow faster.

We provide expert support, training, and flexible collaboration, allowing your CTO and team to achieve great results while reducing risks and costs.

JetRuby’s CTO Co-Pilot bridges the gaps:

- We can create an AI solution from scratch or integrate tailored AI tools that align with your specific tech stack.

- Security first is one of our core pillars. Our experts design deployments that respect compliance mandates and keep sensitive code on secure ground.

- Battle-tested expertise – We’ve completed over 250 successful projects for clients like OSRAM Farming, Backbase, and GoPro, and our Ruby gems have 40 million downloads. Using AI, we speed up development by 50% while maintaining quality and following ISO standards.

- Strategic leadership – Not sure what a CTO is and what it actually does? Our primer on what a CTO means in business clarifies how technical vision turns into delivery value.

If late-night debugging and creeping release dates sound familiar, let’s change the narrative.

You became a developer to create, not to babysit tests.

Contact us to seamlessly integrate AI test automation into your existing systems and let AI handle manual repetitive tasks.