Mobile App Development With AI vs. Traditional Approach

Discover how AI transforms mobile app development — from faster prototyping and smarter code generation to real-time analytics and automated testing. Compare it head-to-head with traditional methods and learn how JetRuby blends AI with proven engineering for faster, more flexible digital products.

Mobile app development keeps moving at breakneck speed. The classic life cycle (discovery, design, coding, QA, deployment) still delivers, but anyone who has shipped even a modest feature set knows how slow, rigid, and expensive that sequence can become. Because every stage happens in order and by human hand, deadlines slide, requirements morph, specialists wait on one another, and costs mount. Overruns feel inevitable, and product teams often reach the finish line with something the market has already outgrown.

Over the past two years, generative AI has shifted the conversation. What began as clever demos that could tweak a screen mock-up or write a few lines of code has matured into a practical toolkit that transforms not only the apps we hold on our phones but the way we build them in the first place. Modern tools sketch wireframes, estimate effort, write boilerplate, predict bugs, and surface real-time usage insights — sometimes before a human even asks. Schedules that once spanned quarters can now compress into a handful of sprints without cutting corners on quality.

Consider the typical scenario inside an SMB that has just raised a round or secured a strategic budget line. Product leadership drafts an ambitious roadmap; marketing wants something flashy before the next trade show; finance expects iron-clad projections. Everyone agrees on the vision, yet three months later the first clickable build is still a Figma prototype, backend schemas are half-drafted, and the burn rate begins to bite. Seasoned founders recognize the pattern: the requirements treadmill keeps spinning while value to users remains out of reach. AI changes that math by handing repetitive chores to machines and letting humans focus on decisions that matter.

In this article, we put AI-powered mobile app development side by side with the traditional approach. Our aim is simple: give SMB decision-makers a clear, experience-backed view of what changes, what stays the same, and where the biggest wins lie. Along the way we’ll show how JetRuby weaves AI into our proven engineering practices to deliver faster, smarter, and more adaptable digital products.

Traditional Mobile App Development: A Quick Recap

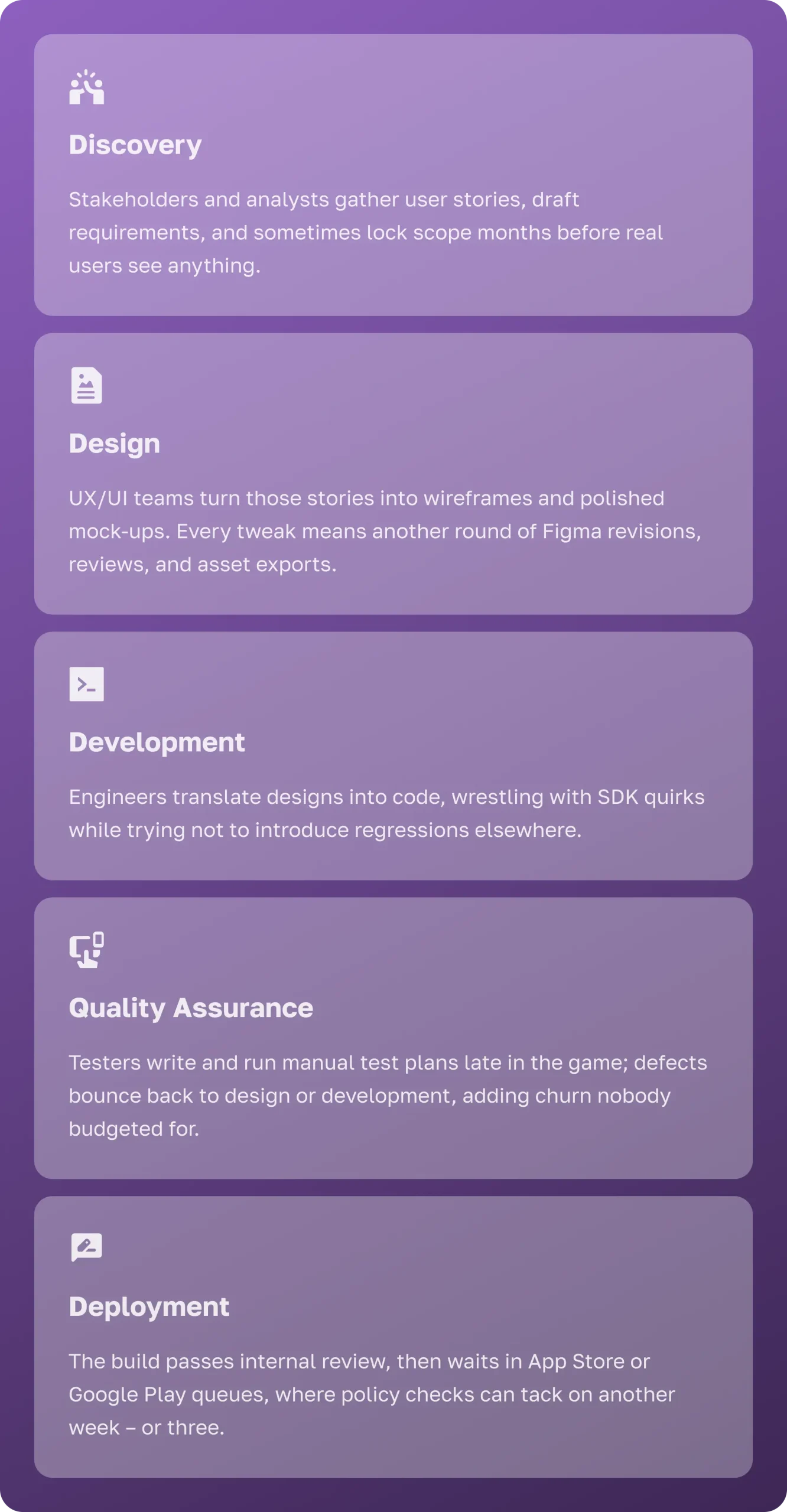

Most mobile projects still open with a familiar, step-by-step routine: discovery, design, coding, QA, and deployment.

That linear flow feels orderly, yet hides several pain points. Feedback loops grow long; a decision made in discovery may not meet a user until QA, months later. Because estimates rely on expert judgment and historical analogy, uncertainty balloons. Studies show two-thirds of large software projects still overshoot budgets or miss deadlines, and SMB initiatives fare only marginally better.

So why does this waterfall-shaped conveyor belt survive? First, it feels safe: managers can track progress as a march of completed phases, even if user value appears only at the end. Second, legacy procurement models pay vendors per milestone, encouraging chunky deliveries rather than continuous flow. Third, many teams lack test-automation infrastructure and therefore avoid incremental releases. The cumulative tax is a rigid culture where change equals risk, and risk means delay.

Hand-offs exacerbate the drag. Every transfer — designer to developer, developer to tester — invites miscommunication and erodes accountability. Even agile rituals cannot fully erase the latency because any significant change requires repeating manual steps. In short, traditional mobile development sacrifices speed and flexibility for the comfort of a well-charted but rigid map.

Mobile App Development With AI: What Changes

Modern AI tools are reshaping each phase of app development, injecting automation and intelligence into the pipeline. In practice, this looks like an AI-augmented workflow from day one.

AI-Assisted Prototyping

Instead of beginning with blank artboards, teams feed a natural-language prompt, such as “Create a dark-mode login screen with email, password, and social sign-on buttons,” into a design generator. Within minutes they get clickable layouts that respect platform guidelines. Early testers poke holes, and designers refine details rather than redraw from scratch. In an internal benchmark, a concept that took two hours to produce manually took twenty-five minutes of guided prompting and polish.

AI in Planning & Estimation

Machine-learning models trained on past sprints, team velocities, and complexity scores forecast effort with surprising accuracy. They highlight risk hot spots long before blockers appear on a burndown chart. As real progress data streams in, estimates adjust automatically, letting project leads shift resources days — not weeks — before a deadline wobbles.
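To make the idea of self-adjusting estimates concrete, here is a minimal sketch. It is not a trained model: a plain rolling average of recent sprint velocities stands in for the forecasting step, and the function name `forecast_sprints`, the `window` parameter, and the sample numbers are all assumptions made for illustration.

```python
import math
from statistics import mean, stdev


def forecast_sprints(remaining_points, velocities, window=3):
    """Estimate sprints left from the most recent `window` sprint velocities.

    Returns (expected, pessimistic). The pessimistic figure assumes the team
    runs one standard deviation slower than its recent average.
    """
    recent = velocities[-window:]
    avg = mean(recent)
    if avg <= 0:
        raise ValueError("average velocity must be positive")
    expected = math.ceil(remaining_points / avg)

    # With a single data point there is no spread to measure.
    spread = stdev(recent) if len(recent) > 1 else 0.0
    slow = max(avg - spread, 1e-9)  # guard against division by zero
    pessimistic = math.ceil(remaining_points / slow)
    return expected, pessimistic


# Hypothetical backlog: 120 story points left, three completed sprints.
print(forecast_sprints(120, [20, 30, 40]))  # (4, 6)
```

Calling the function again after each sprint, with the updated backlog and velocity history, is what lets the estimate move as real progress data arrives. A wide gap between the two figures is a rough signal that a deadline may wobble.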

AI Code Generation

Large language models now speak fluent Swift, Kotlin, React Native, and Flutter. Ask for a token-based authentication module, and an assistant scaffolds networking calls, local storage, error states, and unit tests in seconds. Engineers still review and refine, but their time goes to architecture and edge cases instead of boilerplate. McKinsey pegs the time savings at roughly forty percent, and our own logs echo that range.

Intelligent QA & Testing

Test creation moves left. AI spiders through the repository, maps dependencies, and proposes unit, integration, and UI tests — complete with assertions and mock data. During CI runs, anomaly-detection models flag flaky tests and rare crashes that manual smoke passes miss. Self-healing scripts keep tests from breaking when element IDs shift, so QA focuses on exploratory work instead of babysitting automation.
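One simple way to picture flaky-test flagging is the rule below. It is a heuristic rather than an anomaly-detection model: a test is treated as flaky if it both passed and failed on the same commit. The function name `find_flaky_tests` and the shape of the run records are assumptions for this sketch, not the output format of any particular CI system.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)

    # Two distinct outcomes for one (test, commit) pair means the code did
    # not change but the result did.
    flaky = {name for (name, _sha), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical CI history.
history = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),  # same commit, different result
    ("test_cart", "abc123", False),
    ("test_cart", "def456", True),    # different commit: a real fix, not flakiness
]
print(find_flaky_tests(history))  # ['test_login']
```

A production setup would likely weigh more signals, such as retry counts or timing variance, but the same-commit rule is a useful first filter.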

AI-Powered Analytics

Launch no longer marks the end of development; it starts a feedback flywheel. Embedded ML watches usage patterns, predicts churn, clusters behavior, and suggests A/B variants almost in real time. Product owners answer “What should we build next?” with evidence, not instinct. Roadmaps compress from six-month epics into fortnightly increments guided by live data.
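"Evidence, not instinct" usually comes down to checking whether a variant's lift is more than noise. The sketch below uses a standard two-proportion z-test for that check; the function name `ab_test_p_value` and the sample conversion counts are assumptions chosen for illustration.

```python
import math


def ab_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates.

    conv_* are conversion counts and n_* are the number of users per variant.
    """
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no detectable difference
    z = (conv_b / n_b - conv_a / n_a) / std_err
    # For a standard normal Z, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical experiment: 10% vs 15% conversion with 1,000 users each.
p = ab_test_p_value(100, 1000, 150, 1000)
print(p < 0.01)  # True
```

A small p-value suggests the difference is unlikely to be chance alone. Whether it justifies a roadmap change still depends on sample size, test duration, and how much the metric matters.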

Cultural Lift, Not Just Tooling

Adopting AI reshapes team culture. Designers think in high-frequency loops because prompts yield assets in minutes. Developers treat AI suggestions like pair-programming sessions, creating a steady hum of peer learning. Product managers spend less time chasing status updates and more time turning analytics into roadmap pivots. The entire team moves from phase gate to flow.

Real-Life Case

A regional retail chain asked JetRuby to rebuild its loyalty app on a tight twelve-week deadline. By plugging AI prototyping into sprint one, we produced three design directions in a single afternoon workshop and validated them with thirty beta customers by week two. AI code generators built the onboarding flow and coupon-engine stubs while engineers implemented business rules. Automated tests caught an edge-case pricing bug during CI, saving a likely two-day hotfix cycle. The client launched four weeks early and has since doubled monthly active users.

These enhancements — rapid prototyping, smarter estimation, generated code, automated QA, and always-on analytics — represent the practical benefits of AI in app development. They trim cycle time, pare back cost, and let teams follow user needs rather than paper plans.

Head-to-Head Comparison: AI vs Traditional

When comparing AI mobile app development vs traditional approaches, the contrasts in speed, adaptability, and budget discipline are impossible to ignore.

- Time to MVP – Classic cycles often need four to six months for a usable build; AI-accelerated teams pare that to two or three.

- Flexibility – Traditional projects lock scope early because change is expensive. In an AI workflow a prompt refreshes screens or data models, so mid-course corrections land in days.

- QA & Testing – Manual plans run late and shallow; AI embeds test generation at commit time and watches logs for anomalies, lowering change-failure rates.

- Cost of Changes – Late tweaks once triggered redraws, rewrites, and full regressions. AI slashes that overhead — analysts report up to thirty-percent lower development costs.

- Innovation Velocity – Freed from boilerplate, teams experiment with AR try-ons, predictive search, or voice commands without derailing the roadmap.

- Security & Compliance – Traditional teams manually audit each change for GDPR, PCI-DSS, or HIPAA. AI-driven analyzers now scan commits against rulesets instantly, cutting review cycles from days to hours.
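As a rough picture of what "scanning commits against rulesets" means, the sketch below checks the added lines of a unified diff against a small set of regular expressions. The rule names and patterns in `RULES` are hypothetical and far simpler than a real GDPR, PCI-DSS, or HIPAA ruleset; an AI-driven analyzer would go well beyond pattern matching.

```python
import re

# Hypothetical ruleset: names and patterns are illustrative only.
RULES = {
    "possible-card-number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "hardcoded-secret": re.compile(
        r"(?i)(api[_-]?key|password|secret)\s*=\s*['\"][^'\"]+['\"]"
    ),
}


def scan_diff(diff_text):
    """Return (line_number, rule_name) for each added line that trips a rule."""
    findings = []
    for line_no, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect added lines; skip the '+++' file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule_name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((line_no, rule_name))
    return findings


sample_diff = "\n".join([
    "+++ b/app/config.py",
    '+password = "hunter2"',
    "-timeout = 30",
    "+timeout = 60",
])
print(scan_diff(sample_diff))  # [(2, 'hardcoded-secret')]
```

Running a check like this on every commit is what moves review from a periodic audit to immediate feedback, with human reviewers handling whatever the rules flag.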

Where JetRuby Fits In

JetRuby balances two commitments: uphold the engineering rigor clients expect and harness AI speed gains wherever they pay off.

- Prototyping in Hours, Not Days – AI design generators absorb your brand guidelines, color palettes, and user-story maps, then output clickable wireframes before the first sprint planning session wraps. Because layouts arrive so early, stakeholders can walk through the flow, flag friction points, and request tweaks the same day. That feedback goes straight back into the generator, producing revised screens in minutes instead of the multi-day redraw cycle typical of manual tools.

- Code Generation With Oversight – Copilot-style assistants scaffold data models, API endpoints, and UI widgets as soon as tickets are groomed, sparing engineers from boilerplate work. Senior developers then audit the output line by line, refactor for performance and security, and weave the pieces into a coherent, maintainable architecture. The result is production-grade code that arrives faster without sacrificing craftsmanship or long-term stability.

- AI-Backed Quality Gates – Every pull request triggers an ML-driven test creator that maps code paths, generates unit and integration tests, and runs static analysis for security vulnerabilities. Issues surface while authors still have the context fresh in mind, slashing the time it takes to patch defects. Over successive sprints, this gate keeps technical debt low and bolsters release confidence.

- Live Analytics Loop – Post-launch dashboards stream real-time cohort, funnel, and performance metrics to the product team. When a feature underperforms, a simple prompt spins up experiment variants — new copy, flow tweaks, or UI changes — ready for A/B testing within hours. Data-backed insights replace guesswork, letting teams refine the app continuously instead of waiting for quarterly retros.

- Guided Adoption Workshops – In one-day intensives, JetRuby specialists sit side-by-side with your engineers to teach prompt engineering, model guardrails, and CI/CD pipeline integration. Participants leave with working code snippets, a reference prompt library, and a clear roadmap for embedding AI practices into their own workflows. This hands-on transfer accelerates internal adoption and builds lasting capability.

- Ethics & Governance Checks – Every model we deploy undergoes bias audits, privacy impact assessments, and compliance reviews against standards like GDPR and PCI-DSS. Automated scanners monitor data flows for policy violations, while human reviewers validate edge cases. These safeguards ensure AI acceleration never introduces hidden liabilities or reputational risk.

- Continuous Learning Loop – We log every prompt-and-result pair, along with performance outcomes, into a shared knowledge base. Over time, this repository highlights which prompts yield the best code quality, test coverage, or design fidelity. New projects start with proven recipes, steadily compounding the speed and accuracy gains across the entire portfolio.

This hybrid model trims weeks off MVP launches without sacrificing stability. AI is not a gimmick for us — it multiplies more than fifteen years of mobile engineering craft.

Summary

Bringing AI into mobile development changes the cadence of the craft. Traditional, hand-off-heavy cycles often stumble over budgets and timelines. AI-assisted workflows automate design, coding, testing, and insight gathering, trimming schedules by roughly a third and boosting quality. For SMB leaders deciding whether to pivot, the message is clear: faster releases, tighter cost control, and data-driven innovation are now within reach. JetRuby’s AI-enhanced approach delivers those gains today — faster builds, fewer surprises, and products that fit the market better.

The upshot is control. When tools accelerate drudge work, budgets stretch further and creative bandwidth grows. Teams test more hypotheses per sprint, spot failures sooner, and double down on what works. That is a strategic advantage, not just an operational one.