The Future of Multimodal AI in Product Development: What to Expect in 2025 and Beyond

Discover how multimodal AI is transforming product development in 2025 — from faster prototyping to hyper-personalized UX.

AI is no longer just about crunching numbers or spitting out text. Welcome to the era of multimodal AI, where machines can see, hear, speak, and even imagine. If you’re building products in 2025, this isn’t just another tech buzzword. It’s a seismic shift that will reshape how we design, develop, and deliver digital experiences.

In this article, we’ll explore how multimodal AI is transforming product development—from lightning-fast prototyping to hyper-personalized customer experiences. We’ll also show you how to capitalize on this trend to keep your product ahead of the curve.

Multimodal AI 101: What It Really Means

Traditional AI models are specialists: each one handles a single type of data, such as text, images, or audio.

Multimodal AI breaks that boundary. A single model can take in and reason over several data types at once, combining vision, language, audio, and more.

Imagine an assistant like ChatGPT that can read diagrams, summarize YouTube videos, analyze customer reviews, and respond to voice commands, all within a single interface.

Now imagine what that could unlock in your product pipeline.

Why Product Teams Can’t Afford to Ignore This

Whether you’re developing a SaaS platform, launching an e-commerce app, or iterating on your core product, multimodal AI offers transformative benefits:

Turbocharged Prototyping

Feed sketches, voice memos, and customer reviews into an AI system, and get back a working app prototype.

Sounds futuristic? It’s already happening with tools like GPT-4o and Gemini.

Example: Design teams now generate UI mockups in minutes from hand-drawn wireframes and user feedback.

AI-Driven UX Testing

Multimodal AI can automatically analyze video recordings, heatmaps, and user feedback to flag usability issues.

The result? Faster, better-informed iterations with far less guesswork.

Next-Level Personalization

By blending voice commands, browsing history, and even facial cues, multimodal systems deliver hyper-personalized experiences at scale.

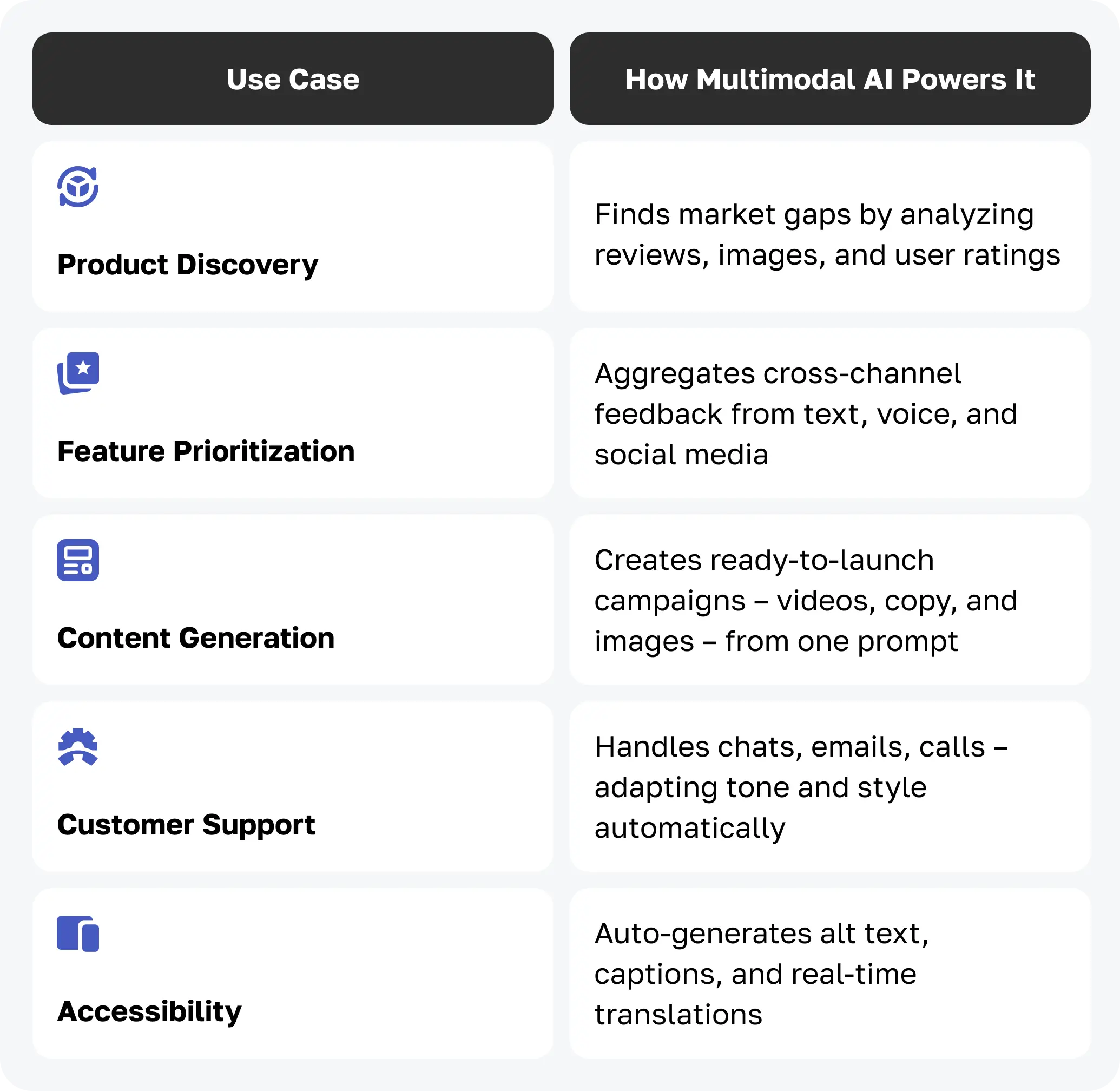

How Leading Teams Are Using Multimodal AI

Here’s where it gets practical. These are the hottest use cases right now:

Game-Changing Tools & Platforms to Watch in 2025

Several groundbreaking platforms are leading the multimodal AI charge in 2025, with capabilities that are already changing how teams build and scale products.

At the forefront is OpenAI’s GPT-4o, a model that natively handles text, images, and audio. This unified approach lets product teams work with complex inputs, whether analyzing visual content, responding to voice commands, or generating detailed written output, without switching tools.

Another standout is Runway ML, a creative powerhouse that enables AI-driven video generation and editing. With Runway, users can craft high-quality videos simply by providing text prompts and images, making it a go-to platform for marketers, content creators, and product designers aiming to produce captivating media at scale.

For design teams, Figma’s AI tools are unlocking new levels of speed and flexibility. These tools can automatically generate user interface designs from spoken instructions or written prompts, dramatically accelerating prototyping and reducing the need for manual design iterations.

Another key player in this space is Perplexity AI, a cutting-edge research assistant that combines search, citations, and visual data to deliver highly relevant, nuanced insights. Whether conducting product research or gathering competitive intelligence, Perplexity helps streamline the process by surfacing credible sources and detailed analysis in seconds.

Here’s the best part: most of these tools now offer API access, meaning you can integrate multimodal AI functionality directly into your product workflows. This unlocks opportunities for automation, advanced personalization, and next-level innovation without starting from scratch.
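To make "integrate via API" concrete, here is a minimal Python sketch of how a single request can carry both text and an image. It assumes OpenAI’s Chat Completions message format, where a user message’s `content` is a list of parts typed `text` or `image_url`; the helper name `build_multimodal_message` and the placeholder image bytes are hypothetical. The sketch only builds the payload and does not send it.

```python
import base64


def build_multimodal_message(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Bundle a text prompt and an image into a single user message.

    Assumes the content-parts shape of OpenAI's Chat Completions API:
    a list mixing {"type": "text"} and {"type": "image_url"} parts.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                # Images can be sent inline as a base64 data URL.
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real wireframe screenshot (hypothetical).
    wireframe_png = b"\x89PNG\r\n\x1a\n"
    message = build_multimodal_message(
        "List the UI components in this hand-drawn wireframe.", wireframe_png
    )
    payload = {"model": "gpt-4o", "messages": [message]}
    # With the official `openai` SDK, the request would be sent roughly as:
    #   from openai import OpenAI
    #   response = OpenAI().chat.completions.create(**payload)
    print([part["type"] for part in message["content"]])  # ['text', 'image_url']
```

Other providers use different request shapes, so treat this as an illustration of the idea (one message, several modalities) rather than a portable client.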

Ready to leverage these tools?

If you’re serious about building AI-powered products, now is the time to act. Explore these platforms, experiment with their APIs, and discover how multimodal AI can supercharge your product development cycle.

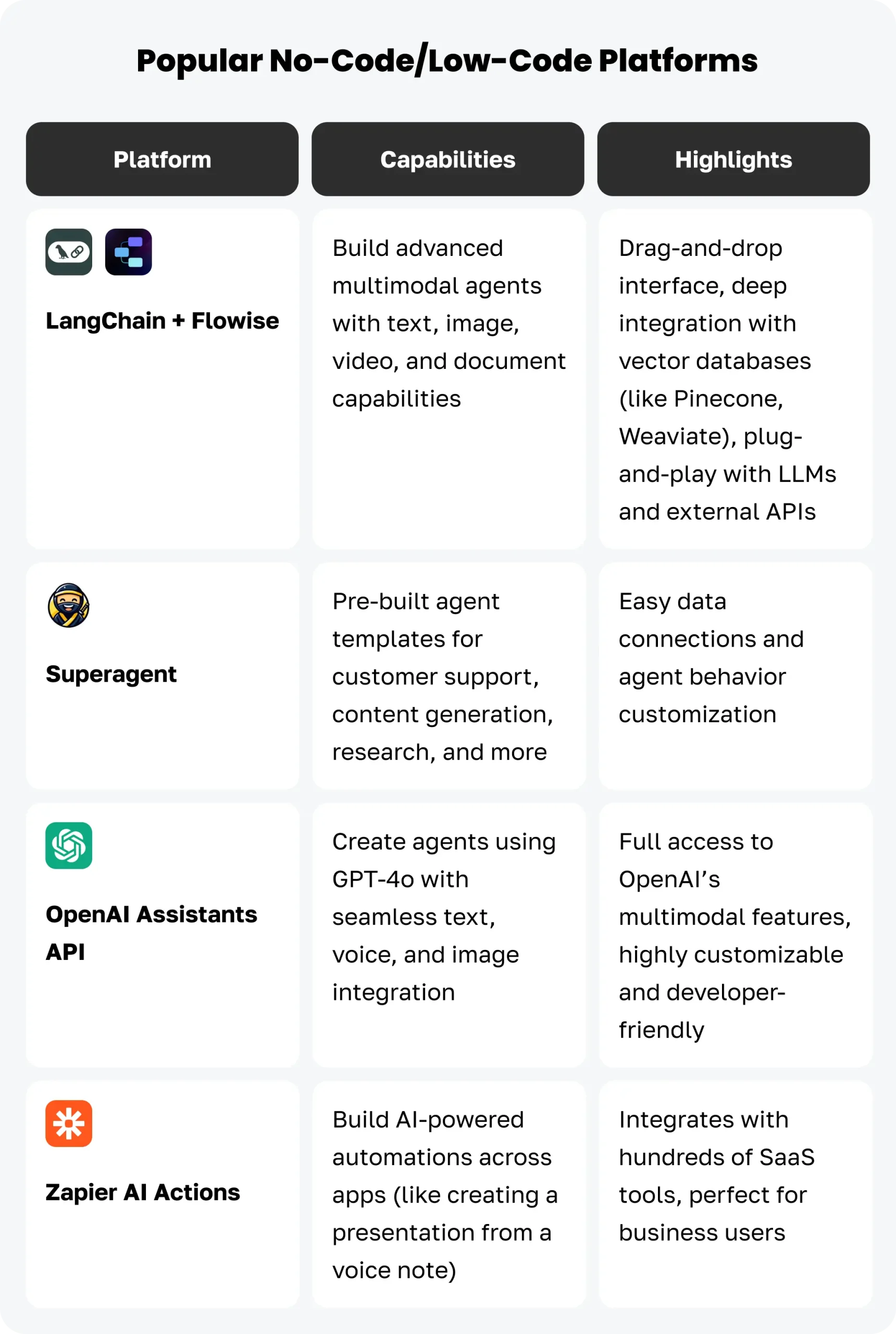

No-Code & Low-Code Builders: Multimodal AI for Everyone

In 2025, no-code and low-code builders for multimodal AI agents are gaining rapid momentum. These tools make advanced AI capabilities accessible even to teams without deep programming or machine learning expertise.

These platforms work like “Lego kits” for building multimodal AI agents. Instead of writing complex code, you visually connect modular blocks, each handling a specific task such as text processing, image analysis, speech recognition, or database interaction.

How It Works:

- Choose Your Agent Type

Select the kind of AI agent you want to build, whether it’s a chatbot, research assistant, virtual concierge, or product assistant.

- Connect Data Sources

Upload documents, images, and videos, or connect external databases, cloud storage, and APIs (e.g., Notion, Slack, Airtable, Google Sheets, S3, CRMs).

- Combine AI Models

Mix and match text, image, audio, and video processing models. For example, your agent can analyze PDF reports, respond to voice commands, and search your knowledge base, all in one place.

- Set Logic & Workflows

Define how the agent handles each type of request, whether it arrives as voice, an image, or text.

- Deploy Your Agent

Launch it as a standalone web app or integrate it into your website, mobile app, or internal system; many platforms let you go live with a single click.
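The “Lego kit” model can be sketched in a few lines of Python: each block is a function that reads a shared state and adds to it, and an agent is simply an ordered chain of blocks. This is a hypothetical illustration of the architecture, not the internals of any particular builder. The block names and the toy in-memory knowledge base are assumptions, and the stand-in functions mark where a real platform would call speech, search, and text-generation models.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
# Each "block" reads the shared state and returns new keys to merge into it.
Block = Callable[[State], State]


@dataclass
class Agent:
    """Hypothetical agent assembled from modular blocks that run in order."""

    name: str
    blocks: List[Block] = field(default_factory=list)

    def add(self, block: Block) -> "Agent":
        self.blocks.append(block)
        return self  # return self so blocks can be chained like bricks

    def run(self, request: State) -> State:
        state: State = dict(request)
        for block in self.blocks:
            state.update(block(state))
        return state


# --- Hypothetical blocks; a real builder would call AI models here. ---

def normalize_input(state: State) -> State:
    """Stand-in for speech recognition: prefer a voice transcript if present."""
    if "voice_transcript" in state:
        return {"query": state["voice_transcript"]}
    return {"query": state.get("text", "")}


def search_knowledge_base(state: State) -> State:
    """Toy keyword lookup over an in-memory 'knowledge base' (assumed data)."""
    kb = {"refund": "refund-policy.pdf", "shipping": "shipping-faq.md"}
    query = state["query"].lower()
    return {"sources": [doc for key, doc in kb.items() if key in query]}


def respond(state: State) -> State:
    """Stand-in for a text-generation model: point to what was found."""
    if state["sources"]:
        return {"reply": "See: " + ", ".join(state["sources"])}
    return {"reply": "Sorry, I couldn't find anything on that."}


support_agent = (
    Agent("support-assistant")
    .add(normalize_input)
    .add(search_knowledge_base)
    .add(respond)
)

if __name__ == "__main__":
    result = support_agent.run({"voice_transcript": "What is your refund policy?"})
    print(result["reply"])  # See: refund-policy.pdf
```

Keeping every block behind the same function signature is what makes the pieces swappable: replacing the toy lookup with a real vector search changes one block, not the agent.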

Real-World Example:

Let’s say you want to build an AI shopping assistant for an e-commerce store:

- The agent can handle text, voice, and image queries — customers can upload photos, describe items verbally, or type in their preferences.

- It analyzes product images, searches your catalog, checks previous purchases, and even automatically adds products to the cart.

- Without writing code, you could assemble such an agent in a low-code tool like Flowise in a matter of hours by connecting your product database to GPT-powered models.
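The shopping-assistant flow above can be sketched as plain Python logic. This is a hypothetical illustration of what such a flow encodes, not Flowise’s API: the catalog, the `ShoppingAssistant` class, and the tag-overlap scoring are assumptions. Image analysis is stubbed out as the list of labels an image model would return, and voice input is assumed to arrive already transcribed to text.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Product:
    sku: str
    name: str
    tags: Set[str]


# Hypothetical toy catalog.
CATALOG = [
    Product("SKU-1", "Red running shoes", {"red", "shoes", "running"}),
    Product("SKU-2", "Blue denim jacket", {"blue", "jacket", "denim"}),
    Product("SKU-3", "Red wool scarf", {"red", "scarf", "wool"}),
]


def tags_from_image(image_labels: List[str]) -> Set[str]:
    """Stand-in for an image model: assume it already returned labels."""
    return {label.lower() for label in image_labels}


def tags_from_text(text: str) -> Set[str]:
    """Crude keyword extraction from typed or transcribed text."""
    return {word.strip(".,!?").lower() for word in text.split()}


@dataclass
class ShoppingAssistant:
    catalog: List[Product]
    cart: List[str] = field(default_factory=list)

    def search(self, text: str = "", image_labels: Optional[List[str]] = None) -> List[Product]:
        # Merge signals from both modalities into one set of wanted tags.
        wanted = tags_from_text(text) | tags_from_image(image_labels or [])
        scored = [(len(p.tags & wanted), p) for p in self.catalog]
        # Keep products sharing at least one tag, best matches first
        # (the sort is stable, so ties keep catalog order).
        scored = [(s, p) for s, p in scored if s > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [p for _, p in scored]

    def add_best_match_to_cart(self, text: str = "", image_labels: Optional[List[str]] = None) -> Optional[str]:
        matches = self.search(text, image_labels)
        if not matches:
            return None
        self.cart.append(matches[0].sku)
        return matches[0].sku


if __name__ == "__main__":
    assistant = ShoppingAssistant(CATALOG)
    # A customer uploads a photo (labelled "Shoes", "Running") and says "in red".
    sku = assistant.add_best_match_to_cart("Something like this in red", ["Shoes", "Running"])
    print(sku, assistant.cart)  # SKU-1 ['SKU-1']
```

Scoring by shared tags keeps the example readable; a production assistant would more likely rank products with embeddings or a search index, and would also factor in previous purchases.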

Why This Matters:

These platforms save development time and democratize multimodal AI, making it accessible to designers, marketers, product managers, and analysts alike.

You can launch experimental agents, test ideas, and integrate them into your product workflows in as little as a day.

Final Takeaway: The Future Belongs to the Bold

Let’s be clear — multimodal AI is not another fleeting trend or buzzword. It’s rapidly becoming the new foundation for digital product development. What we’re witnessing today is the beginning of a fundamental shift in how products are imagined, built, and improved.

The companies and teams that embrace this technology early will not only stay competitive but also redefine their industries. They will set the benchmarks for speed, personalization, and user experience, leaving slower adopters struggling to keep up.

Start small, but start now:

- Experiment with multimodal tools.

- Integrate AI into your existing product workflows.

- Iterate quickly and learn from every cycle.

- And most importantly — stay curious. The most innovative breakthroughs often come from unexpected places.

The future doesn’t wait for the cautious. It rewards those who move boldly and intentionally toward what’s next.