Sidekiq Batches with Sub-Batches. A Simple Way to Organize Code

Sidekiq Batches is a feature of Sidekiq Pro and Enterprise that lets you group jobs, track the group as a single unit, and run callbacks once every job in it has finished. That makes it a natural way to manage dependencies between groups of jobs. In this article, I’ll share how we used it in a real project and how we developed a wrapper to keep the batch code organized and reliable.

The Challenge

We had a project that required seamless synchronization between multiple systems. This is a common scenario where you need to sync a list of resources across different services, and naturally, we turned to Sidekiq for handling this background process.

Our process was straightforward:

- Retrieve a list of resources.

- Initiate synchronization for each resource.

This could also be done individually if only one resource needed synchronization.

Our Resources Included:

UniversitySync #University class

FacultySync #Faculties class

DepartmentsSync → DepartmentSync #Departments

CoursesSync → CourseSync #Courses

ProfessorsSync → ProfessorSync #Professors

StudentsSync → StudentSync #Students

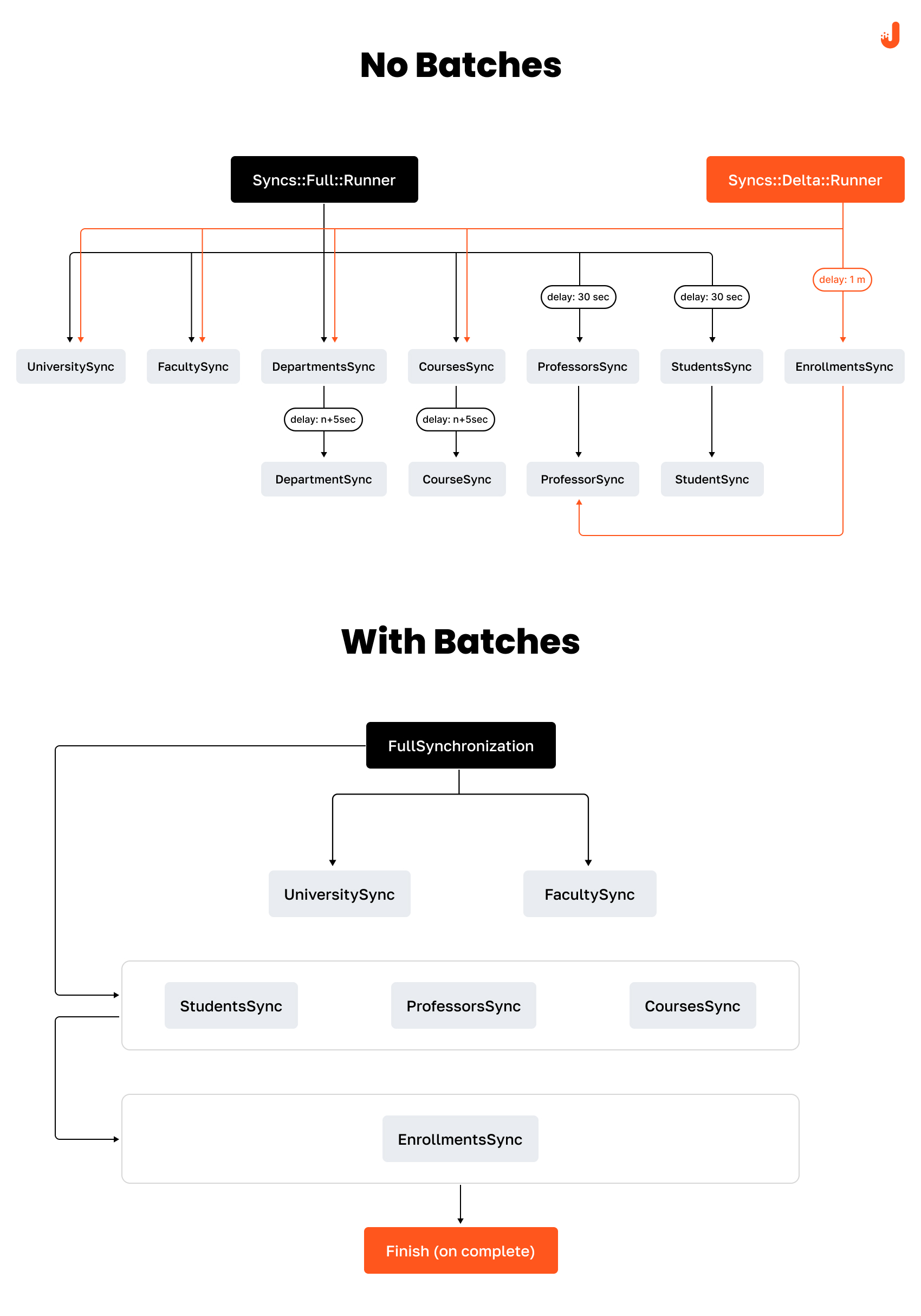

EnrollmentsSync → EnrollmentSync #EnrollmentsWhile these granular classes made it easy to scale the synchronization process across various resource types, we couldn’t synchronize all resources in parallel due to dependencies. For instance, Students needed to be synchronized before Enrollments, and both University and Faculties needed synchronization before any other resources.
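To make the ordering constraint concrete, here is a minimal sketch in plain Ruby that groups the resources into stages that can each run in parallel. It encodes only the dependencies stated above; the `DEPENDENCIES` map and the `sync_stages` helper are hypothetical names for illustration, not part of our codebase, and the helper assumes the map has no cycles.

```ruby
# Dependencies as stated in the article: University and Faculties come
# before everything else, and Students come before Enrollments.
ROOTS = %i[university faculty].freeze

DEPENDENCIES = {
  university:  [],
  faculty:     [],
  departments: ROOTS,
  courses:     ROOTS,
  professors:  ROOTS,
  students:    ROOTS,
  enrollments: ROOTS + [:students]
}.freeze

# Groups resources into ordered stages. A resource is placed one stage
# after the latest stage of any of its dependencies, so everything inside
# a single stage can be synchronized in parallel.
# Hypothetical helper; assumes the dependency map is acyclic.
def sync_stages(dependencies)
  stage_of = {}
  resolve = lambda do |name|
    stage_of[name] ||= (dependencies.fetch(name).map { |dep| resolve.call(dep) + 1 }.max || 0)
  end
  dependencies.each_key { |name| resolve.call(name) }

  stage_of.group_by { |_name, stage| stage }
          .sort
          .map { |_stage, pairs| pairs.map(&:first) }
end

sync_stages(DEPENDENCIES).each_with_index do |stage, index|
  puts "Stage #{index + 1}: #{stage.join(', ')}"
end
```

Each stage here corresponds to one group of jobs that has to finish before the next group may start, which is the shape of problem the rest of the article deals with.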

Addressing Synchronization Challenges with Vanilla Sidekiq

Initially, we tried introducing delays between synchronizations. However, this method came with significant challenges:

- Unpredictability: Managing and maintaining delays was difficult, leading to potential inconsistencies when dependent resources synchronized before their parent resources.

- Inefficiency: Fixed delays often left dependent syncs waiting idly long after their parents had finished, wasting processing time.

- Unclear Completion: There was no reliable way to tell when the overall synchronization process had finished.

Leveraging Sidekiq Batches

When we discovered the Batches mechanism of the Sidekiq Pro and Enterprise versions, it seemed like a perfect fit for managing our dependencies. By combining work in a batch with sub-batches, we hoped to close the gaps described earlier.

Cons of Sidekiq Batches Code Organization

Despite its benefits, the default code organization in Sidekiq Batches had its downsides:

- Callbacks in One Class: In theory this keeps things simple, but in practice a long list of batches and sub-batches in a single class became a mess. Giving each step its own class would have been easier to test.

- API Handling: Developers had to manage the batches’ API, initializing the parent batch and correctly wrapping sub-batches.

It looks like this:

    def step2_done(status, options)
      oid = options['oid']
      overall = Sidekiq::Batch.new(status.parent_bid)
      overall.jobs do
        step3 = Sidekiq::Batch.new # <- Sub-batch
        step3.on(:success, 'FulfillmentCallbacks#step3_done', 'oid' => oid)
        step3.jobs do
          G.perform_async(oid)
        end
      end
    end

Implementing a Simple Wrapper

To tackle these cons, our engineers developed a wrapper. It lets batches and sub-batches be defined the same way, from a single base class, and keeps each batch's callbacks next to the batch class. Developers no longer have to wire up parent batches and sub-batches by hand, which makes each step simpler to write and easier to get right.

Wrapper Requirements:

- Define batches and sub-batches in one class.

- Have callbacks for the batch near the batch class.

Here’s a basic example of the wrapper:

    # @example basic batch with callback:
    #
    #   module DoSomeWork
    #     class Batch < Batches::Base
    #       def enqueue(sync_run_id)
    #         current_batch.description = "Doing some work"
    #         current_batch.on(:complete, Callback)
    #
    #         jobs do
    #           SomeWorker.perform_async
    #           SomeWorker.perform_async
    #         end
    #       end
    #     end
    #
    #     class Callback
    #       def on_complete(status, options)
    #         Rails.logger.info "Batch complete"
    #       end
    #     end
    #   end
    #
    #   Usage -> DoSomeWork::Batch.enqueue(sync_run_id: sync_run_id)
    module Batches
      class Base
        include Testing if Rails.env.test?

        attr_reader :parent_batch, :current_batch

        # Reopens the parent batch when a parent_bid is given. Otherwise uses
        # NullBatch (not shown here), which must respond to #jobs.
        def initialize(parent_bid = nil)
          @parent_batch = if parent_bid.present?
                            ::Sidekiq::Batch.new(parent_bid)
                          else
                            NullBatch.new
                          end
          @current_batch = ::Sidekiq::Batch.new
        end

        delegate :description=, :description, :bid, to: :current_batch

        # @note Simple batch initialization when you don't want to define callbacks.
        # @param [String] sync_run_id
        # @param [String] parent_bid - should be passed if it's a sub-batch
        # @return [Batches::Base]
        def self.enqueue(sync_run_id:, parent_bid: nil)
          batch = new(parent_bid)
          batch.enqueue(sync_run_id)
          batch
        end

        # Subclasses define their jobs and callbacks here.
        def enqueue(sync_run_id)
          raise NotImplementedError, "#{self.class} must implement #enqueue"
        end

        def on(*args)
          current_batch.on(*args)
          self
        end

        private

        # Opens the current batch inside the parent batch so that it is
        # registered as a sub-batch when a parent exists.
        def jobs(&block)
          parent_batch.jobs { current_batch.jobs(&block) }
        end
      end
    end

Using the Wrapper for Batches and Sub-batches
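The Base class refers to a `NullBatch` that this article does not show. A minimal sketch of what such a null object could look like follows; this is an assumption based only on how Base calls `parent_batch.jobs`, not our actual implementation.

```ruby
module Batches
  # Hypothetical null object used when a batch has no parent.
  # Assumption: Base only ever calls #jobs on the parent batch, so that is
  # the only method mirrored here.
  class NullBatch
    # Runs the block immediately, with no parent batch wrapped around it.
    def jobs
      yield
    end
  end
end

# With no parent, the inner block simply runs as-is.
enqueued = []
Batches::NullBatch.new.jobs { enqueued << :top_level_batch }
p enqueued
```

With an object like this in place, `Base#jobs` does not need to branch on whether a parent exists: a top-level batch and a sub-batch go through the same code path.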

    module UniversityAndFaculty
      class Batch < ::Batches::Base
        def enqueue(run_id)
          current_batch.description = "University/Faculty sync for #{run_id}"
          current_batch.on(:complete, Callback, 'run_id' => run_id)

          jobs do
            ::Syncs::UniversitySyncWorker.perform_async(run_id)
            ::Syncs::FacultySyncWorker.perform_async(run_id)
          end
        end
      end

      class Callback
        def on_complete(status, options)
          # Enqueue the next step as a sub-batch of the same parent batch.
          # Base.enqueue takes the run id as the sync_run_id: keyword.
          DepartmentsAndCourses::Batch.enqueue(
            sync_run_id: options['run_id'],
            parent_bid: status.parent_bid
          )
        end
      end
    end

With this wrapper, our batch and sub-batch classes looked almost identical because the complexity of the Sidekiq Batch API was hidden in the Base class. This organization made our code cleaner, easier to test, and more reliable.
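One practical consequence of keeping each callback in its own small class is that it can be exercised without Sidekiq at all. The sketch below is a rough illustration under stated assumptions: `FakeStatus` and the recording version of `DepartmentsAndCourses::Batch` are hypothetical stand-ins written for this example, and the callback passes the run id using the `sync_run_id:` keyword that `Base.enqueue` declares.

```ruby
# Stand-in for the status object Sidekiq passes to batch callbacks.
# Only the attribute this callback reads is modelled.
FakeStatus = Struct.new(:parent_bid)

# Hypothetical stand-in for the next batch class. It records the arguments
# it receives instead of talking to Sidekiq.
module DepartmentsAndCourses
  class Batch
    class << self
      attr_reader :calls

      def enqueue(**kwargs)
        (@calls ||= []) << kwargs
      end
    end
  end
end

# The callback under test, in the same shape as the one in the article.
module UniversityAndFaculty
  class Callback
    def on_complete(status, options)
      DepartmentsAndCourses::Batch.enqueue(
        sync_run_id: options['run_id'],
        parent_bid: status.parent_bid
      )
    end
  end
end

# Drive the callback directly, as a unit test would.
UniversityAndFaculty::Callback.new.on_complete(
  FakeStatus.new('parent-bid'),
  { 'run_id' => 'run-1' }
)
p DepartmentsAndCourses::Batch.calls
```

In a real test suite the stand-ins would more likely be test doubles from your testing framework, but the idea is the same: the callback's only job is to hand the run id and the parent batch id to the next step.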

Summing up

Our journey to streamline the synchronization process led us to Sidekiq’s Batches feature, which provided a solid foundation but also came with its own set of challenges. Instead of letting these issues slow us down, our engineering team developed a wrapper that simplified batch and sub-batch definitions, making the code easier to manage and more reliable.

We hope our experience and solution inspire other Ruby developers facing similar issues. Our wrapper isn’t just a tool; it’s a testament to our commitment to writing efficient, effective code. Stay tuned to our blog for more stories and solutions from our engineering team.