Understanding AI Training Data: What It Is, How It Works, and Where to Find It

Learn how data powers AI, from collection and preparation to labeling, and explore key issues such as privacy, bias, and sustainability.


People generally make decisions based on their past experiences, which come from different situations and interactions. In simple terms, situations and people provide data that our minds use. As we gain more experience, our minds learn to make quick and smooth decisions.

This shows that data is essential for learning.

Just as a child must learn the alphabet before it can read, a machine must learn to interpret the data it receives. This is the main idea behind training Artificial Intelligence (AI). An untrained machine is like a child who has not yet learned: it cannot tell a cat from a dog or a bus from a car, because it has never seen examples of what they look like.

When people talk about “training” an AI, what does that actually mean? How does it work, and how much of it is automated? Answering these questions also shows how innovation and responsibility go hand in hand in AI development, helping ensure that each new model is built ethically and robustly.

So let’s find out what AI training data is.

Key takeaways:

- AI learns patterns from examples, just like people use experiences to make decisions.

- Neural networks adjust their internal weights based on labeled examples, gradually improving accuracy.

- Collecting diverse, high-quality data and carefully labeling it is crucial for success.

- Bias, privacy, transparency, sustainability, and scaling are major hurdles to overcome.

- Fairness, transparency, and respect for privacy must guide AI development.

- JetRuby optimizes data and code processes, ensuring accurate, efficient, and forward-looking AI solutions.

How Neural Networks Learn from Training Data

Most modern AI systems are built on neural networks: layers of artificial neurons linked by numerical weights. These weights determine how strongly signals pass between neurons. Before training, the weights are set to random values. The real work happens during training, when the network adjusts these weights by learning from labeled examples.

For example, if you want to teach a neural network to recognize cats in photos, you show it thousands of images, some with cats and some without. At first, the network's guesses are no better than random.

After each guess, the network measures how wrong it was using a loss function. Backpropagation then calculates how much each weight contributed to that error. An optimization algorithm such as gradient descent nudges every weight in the direction that reduces the error. Repeated over many examples, this cycle of predicting, measuring error, and adjusting weights is the core of how neural networks learn.
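The minimal sketch below makes the weight-adjustment loop concrete. It trains a single artificial neuron with gradient descent on a tiny, made-up dataset. A single neuron has no hidden layers, so backpropagation collapses to one gradient formula; a real network applies the chain rule through every layer. The data, learning rate, epoch count, and helper names are illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, labels, epochs=500, lr=0.5, seed=0):
    """Train a single sigmoid neuron with stochastic gradient descent on log loss."""
    rng = random.Random(seed)
    # Weights start as small random values.
    weights = [rng.uniform(-0.1, 0.1) for _ in range(len(samples[0]))]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            # Forward pass: weighted sum of inputs, then sigmoid.
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # For a sigmoid output with log loss, the gradient with respect
            # to the weighted sum is simply (prediction - label).
            error = p - y
            # Gradient descent: move each weight against its share of the error.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x) -> int:
    """Return 1 if the neuron's probability is at least 0.5, else 0."""
    p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    return 1 if p >= 0.5 else 0


# Hypothetical toy data: two numeric features; label 1 when both are large.
samples = [[0.1, 0.2], [0.3, 0.1], [0.2, 0.4], [0.9, 0.8], [0.7, 0.9], [1.0, 0.6]]
labels = [0, 0, 0, 1, 1, 1]
weights, bias = train_neuron(samples, labels)
print([predict(weights, bias, x) for x in samples])
```

The starting weights are random, yet repeated small corrections produce weights that separate the two classes. This is the same mechanism, at a much larger scale, that lets a network learn to recognize cats.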

Training is computationally expensive and often runs on many servers in parallel. Once the network is trained, however, a single prediction, such as identifying a cat, is fast and needs far less computation and energy than training did. This is why well-trained models are so valuable: they package everything learned during training into a form that can run efficiently at scale.

A key point is that the quality of training data matters a lot. If you feed the system poor data, it produces poor results. Consistent, well-labeled examples help the network identify real patterns instead of noise or biases.

To see how AI might evolve in the near future, check out 14 exciting AI developments coming in 2025 to understand where this technology is heading.

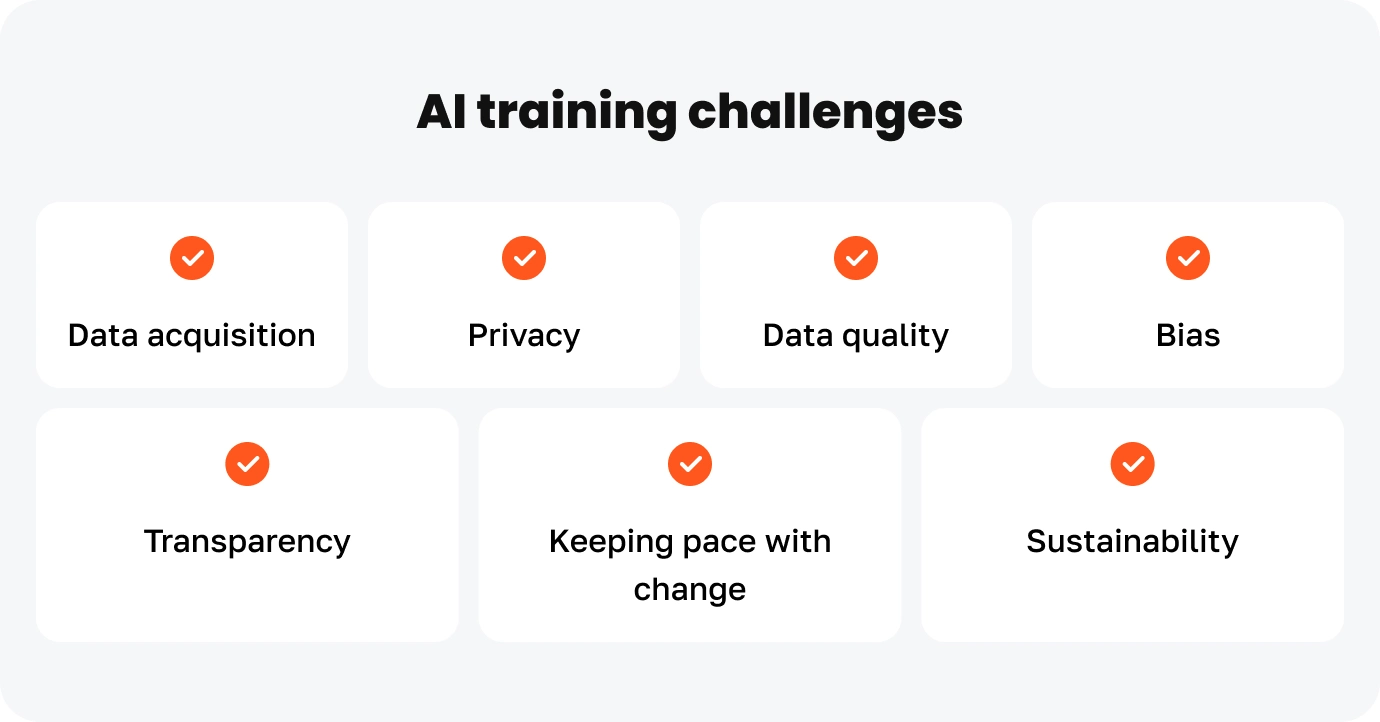

The most fundamental AI training data challenges

Building effective AI goes beyond advanced algorithms; it requires tackling key data challenges. Success hinges on addressing practical issues in how these systems learn.

The process is complex. What looks like a purely technical problem often reveals deeper issues of ethics, operational limits, and environmental impact. These factors directly determine whether an AI system adds value or causes unintended harm.

Data acquisition

If training data is the foundation of AI development, then data acquisition is one of the most fundamental AI training challenges, and it makes many of the other challenges worse. AI training requires vast amounts of high-quality, relevant data to deliver an application that meets users’ increasingly high expectations. Even before considering quality, the first challenge is sourcing enough data. For niche applications, the required volume may not even exist. Where it does exist, privacy or legal restrictions can make it difficult to acquire.

Privacy

AI training often requires datasets that include personally identifiable information (PII), such as names, phone numbers, and addresses. It may also require other sensitive information, such as health data, financial records, or confidential business information. If you have no choice but to use such data, you must do so without compromising the privacy of individuals or organizations. This challenge is both ethical and legal: beyond the principles of responsible AI, you must comply with data protection laws.
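A common first step toward protecting privacy is redacting obvious PII before data enters a training pipeline. The sketch below masks email addresses and phone-number-like strings with placeholders. The regular expressions are simplified assumptions rather than a complete PII detector. Names, addresses, and ID numbers need broader techniques, so the name in the example deliberately survives.

```python
import re

# Simplified, illustrative patterns; real PII detection needs much broader
# coverage (names, addresses, IDs) and usually dedicated tooling.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


record = "Contact Jane at jane.doe@example.com or +1 (555) 123-4567."
print(redact_pii(record))
```

Redaction like this reduces exposure but does not guarantee anonymity. Records can still be re-identified by combining other fields, so it complements access controls and legal review rather than replacing them.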

Data quality

The well-known principle of ‘garbage in, garbage out’ sums up the relationship between the quality of training data and the performance of your AI model. So how do you achieve the opposite, ‘quality in, quality out’? This is one of the toughest AI training challenges because of the sheer volume of data involved and the many dimensions of data quality, such as accuracy, completeness, consistency, and relevance.

Bias

As our separate blog on data quality makes clear, freedom from bias is one of the most crucial characteristics of AI training data. I’ve called it out here because it is such an important and specific challenge. If the training data contains biases, whether conscious or unconscious, the AI model will likely replicate them in its predictions. For example, a facial recognition system trained mostly on people of one ethnicity may struggle to recognize people of other ethnicities accurately.
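One practical way to catch this kind of bias is to evaluate the model separately for each group rather than only in aggregate. The minimal sketch below assumes you already have true labels, predictions, and a group attribute for each test sample. The function name and the data are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy plus a per-group breakdown."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    overall = sum(correct.values()) / sum(total.values())
    return overall, {g: correct[g] / total[g] for g in total}


# Hypothetical results: group "A" dominates the test set, group "B" is rare.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
groups = ["A"] * 7 + ["B"] * 3
overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall, per_group)
```

In this made-up example, the 80% overall accuracy hides the fact that the model is right only a third of the time for group B. Per-group metrics expose exactly the kind of gap described above.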

Transparency

Too often, AI applications suffer from a black-box problem: it is difficult or impossible to understand how the model processes data, how it generates its output, or why it made a particular decision. This opacity makes bias hard to detect and correct. That is why transparency is perhaps the most critical AI training challenge to solve if we want people to trust AI.

Keeping pace with change

The more deeply AI is embedded in our lives, the more important it becomes for applications to adapt to changing environments and learn from new data in real time. Otherwise, they will inevitably ‘fall behind’, producing predictions and decisions that grow less and less relevant.
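Before a model can adapt, you first need to notice that incoming data no longer looks like the training data. One widely used drift measure is the Population Stability Index (PSI). It compares how a feature's values are distributed across bins in two samples. PSI is our illustrative choice here, not a method prescribed by this article. The bin edges and values below are hypothetical.

```python
import math
from bisect import bisect_right


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1  # find the bin for v
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [10, 20, 30]                          # hypothetical bin boundaries
train = [5, 12, 15, 18, 22, 25, 28, 35]       # feature values seen in training
live = [25, 28, 32, 35, 38, 40, 42, 45]       # feature values seen in production
print(psi(train, train, edges), psi(train, live, edges))
```

Identical distributions give a PSI of zero, and larger values indicate larger shifts. Commonly quoted rules of thumb treat values above roughly 0.25 as a significant shift, but thresholds vary by domain. A high score is a signal to investigate and possibly retrain.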

Sustainability

Training modern AI models takes a huge amount of time, computing power, and energy. This has obvious implications for the sustainability objectives of individual organizations and society in general.
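A rough way to reason about training energy is to multiply the number of accelerators by their average power draw and the training time. You then scale the result by the data center's power usage effectiveness (PUE), the ratio of total facility energy to IT equipment energy. The sketch below is a back-of-the-envelope estimate under those assumptions. It ignores CPUs, storage, and networking, and all input numbers are hypothetical.

```python
def training_energy_kwh(num_accelerators: int, avg_power_kw: float,
                        hours: float, pue: float = 1.0) -> float:
    """Rough estimate: devices x average power (kW) x hours x facility overhead (PUE)."""
    return num_accelerators * avg_power_kw * hours * pue


# Hypothetical run: 8 accelerators averaging 0.4 kW each for 100 hours, PUE of 1.2.
print(training_energy_kwh(8, 0.4, 100, pue=1.2))
```

Even this crude formula shows which levers matter most for sustainability: fewer or more efficient devices, shorter training runs, and more efficient facilities.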

AI training challenges? Or opportunities?

AI training challenges are not easy to solve. However, we should see each challenge as a chance to promote innovation. This innovation is crucial for developing AI that is ethical, effective, and beneficial to everyone.

Preparing Data for AI Model Training

Preparing data is a crucial stage of AI development and involves several distinct steps. The quality of the prepared data directly determines how well the model performs, so each step demands close attention to detail:

Data Collection

Training effective AI requires varied datasets that cover many different situations. When training samples lack diversity, the model’s abilities are limited. For example, an image recognition system trained only on photos of animals standing still may fail to identify the same animals in motion. Different applications have different data needs. When real data is missing or insufficient, generated (synthetic) data can help fill the gap.

Data Annotation

Supervised learning depends on accurately labeled training samples, which serve as the reference answers the model learns from. Humans must identify and tag the important features in a dataset, for example by outlining objects in images, categorizing text, or classifying audio samples. Labeling requires skilled annotators and is one of the most time- and resource-intensive parts of AI development.
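One common way to check that labels are reliable is to have two annotators label the same samples and measure their agreement. Cohen's kappa corrects raw agreement for the agreement you would expect by chance. It is our illustrative choice of metric, and the labels below are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same class purely by chance.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical class throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat", "cat", "dog", "cat", "dog", "dog", "dog"]
print(cohens_kappa(annotator_1, annotator_2))
```

Here the annotators agree on 80% of samples, but half of that agreement would be expected by chance, so kappa is about 0.6. Low kappa scores usually point to unclear labeling guidelines rather than careless annotators.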

Data Validation

Quality assurance should verify that datasets are accurate, using both automated checks and human review. This step helps identify and fix potential problems, including:

– Incorrect or inconsistent labeling

– Unrelated or duplicate samples

– Biases that distort representation

– Missing or incomplete data points
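The automated part of this validation can start with simple rules. The minimal sketch below flags records with missing values, exact duplicates, and conflicting labels (identical inputs tagged with different labels). It assumes each record is a dictionary with a `label` field. The function name and sample records are hypothetical, and detecting bias or unrelated samples would need additional checks.

```python
from collections import defaultdict


def validate_records(records, label_key="label"):
    """Flag missing fields, exact duplicates, and conflicting labels."""
    issues = {"missing": [], "duplicates": [], "conflicts": []}
    seen = set()
    labels_by_input = defaultdict(set)
    for i, rec in enumerate(records):
        if any(v is None or v == "" for v in rec.values()):
            issues["missing"].append(i)
            continue  # skip further checks on incomplete records
        key = tuple(sorted(rec.items()))
        if key in seen:
            issues["duplicates"].append(i)
        seen.add(key)
        # Group labels by the non-label fields to spot inconsistent labeling.
        features = tuple(sorted((k, v) for k, v in rec.items() if k != label_key))
        labels_by_input[features].add(rec[label_key])
    issues["conflicts"] = [dict(f) for f, labs in labels_by_input.items() if len(labs) > 1]
    return issues


records = [
    {"text": "great product", "label": "positive"},
    {"text": "great product", "label": "positive"},     # exact duplicate
    {"text": "terrible support", "label": "negative"},
    {"text": "terrible support", "label": "positive"},  # conflicting label
    {"text": "", "label": "neutral"},                   # missing input
]
print(validate_records(records))
```

Rules like these are cheap to run on every new batch of data. Their output gives human reviewers a short list of suspicious records to examine.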

Data Preprocessing

Before training a model, we need to prepare the datasets by making several important adjustments:

1. Normalization: We should scale numerical features to fit into consistent ranges.

2. Cleaning: We must remove any corrupted or unusual samples.

3. Augmentation: We can artificially increase the dataset’s variety when needed.

4. Stratification: We need to preserve each category’s share of the data when sampling or splitting it, so that no category ends up under- or over-represented.
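The cleaning and normalization steps above can be sketched in a few lines. This example assumes tabular numeric data stored as lists of rows. It treats missing or non-finite values as "corrupted" and uses min-max scaling to bring every feature into the range 0 to 1. The helper names and data are hypothetical.

```python
import math


def clean(rows):
    """Drop rows containing missing (None) or non-finite (NaN, inf) values."""
    return [r for r in rows if all(v is not None and math.isfinite(v) for v in r)]


def min_max_normalize(rows):
    """Scale each column to [0, 1]; constant columns are mapped to 0."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(r, lows, highs)]
        for r in rows
    ]


# Hypothetical rows: [age in years, income in thousands]
raw = [[25, 40.0], [35, 60.0], [None, 55.0], [45, float("nan")], [55, 100.0]]
rows = clean(raw)
print(min_max_normalize(rows))
```

In a real pipeline, compute the minimum and maximum on the training split only and reuse them for validation and test data. Otherwise, information from the evaluation data leaks into training.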

To evaluate the model effectively, we should divide the finalized dataset into separate training, validation, and testing sets. This separation lets us detect overfitting and accurately measure how well the model performs on data it has never seen.
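The minimal sketch below combines this split with the stratification step. It shuffles each category separately and then divides it into training, validation, and test portions, so every split keeps the original class proportions. The 70/15/15 percentages, the seed, and the data are illustrative assumptions.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, train_pct=70, val_pct=15, seed=42):
    """Split (item, label) pairs into train/val/test, keeping each label's share."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for item, label in zip(items, labels):
        by_label[label].append((item, label))
    train, val, test = [], [], []
    for group in by_label.values():
        rng.shuffle(group)
        # Integer arithmetic avoids floating-point rounding in the split sizes.
        n_train = len(group) * train_pct // 100
        n_val = len(group) * val_pct // 100
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Hypothetical imbalanced dataset: 80 "cat" samples and 20 "dog" samples.
items = list(range(100))
labels = ["cat"] * 80 + ["dog"] * 20
train, val, test = stratified_split(items, labels)
for name, split in [("train", train), ("val", val), ("test", test)]:
    dogs = sum(1 for _, label in split if label == "dog")
    print(name, len(split), f"{dogs / len(split):.0%} dog")
```

Each split here ends up 20% "dog", matching the full dataset. A purely random split of an imbalanced dataset can leave the smaller validation or test sets with too few examples of the rare class.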

Data preprocessing has a major effect on how well an AI model performs. Mistakes made at this stage carry through to the AI system once it is in use.

How is AI trained?

Once you have prepared your training data, you can begin the training process. This means feeding your data to algorithms that learn in different ways. There are three main approaches, which are often combined:

- In supervised learning, an AI algorithm learns from labeled data, where each label shows the output the AI should produce. The process resembles a teacher guiding a student: the model, like the student, learns from the examples the teacher provides. For instance, if you show an AI model images labeled ‘dog,’ it learns to recognize the features that make up a dog. Over time, it gets better at taking an unlabeled image of a dog and correctly producing the output ‘dog.’

- In unsupervised learning, an AI model works with unlabeled data, looking for patterns and structures without any guidance. This type of learning is useful when we don’t know the outcomes in advance. It also helps when we want the model to find structure beyond what is obvious or already labeled by humans. By letting the model group similar data points or flag unusual ones, we can uncover hidden patterns. For example, an AI model using unsupervised learning might spot unusual patterns in patient health data that could signal potential health issues, supporting early diagnosis and personalized treatment plans.

- In reinforcement learning, an AI model learns by trying different actions and getting feedback through rewards or punishments. This feedback helps the model understand the results of its actions and improve its decisions over time. A typical example is an AI model that learns to play a game. It plays many times and changes its strategy based on whether it wins (reward) or loses (punishment). Over time, it discovers what works well and what does not.
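The reinforcement learning loop of acting, receiving a reward or punishment, and adjusting can be illustrated with an epsilon-greedy multi-armed bandit. This is a deliberately simplified reinforcement learning setting with no game state. It is our choice of illustration, not a method from the article. The win probabilities for the three hypothetical actions are made up.

```python
import random


def epsilon_greedy(win_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays off best purely from reward feedback."""
    rng = random.Random(seed)
    n = len(win_probs)
    values = [0.0] * n  # running estimate of each action's average reward
    counts = [0] * n    # how often each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                        # explore
        else:
            action = max(range(n), key=lambda a: values[a])  # exploit
        # Environment feedback: +1 for a win (reward), -1 for a loss (punishment).
        reward = 1.0 if rng.random() < win_probs[action] else -1.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]  # incremental mean
    return values, counts


values, counts = epsilon_greedy([0.2, 0.5, 0.8])
print(values, counts)
```

The epsilon parameter controls the trade-off between trying new actions and exploiting the best one found so far. After enough plays, the agent spends most of its time on the action with the highest win rate, even though nobody told it which one that was.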

Understanding how AI is trained reveals an important truth: good data leads to good decisions, whether they are made by people or machines. This article has covered the core steps of AI training: collecting, labeling, validating, and preparing data so models can learn effectively. It has also discussed significant challenges such as privacy, bias, transparency, and sustainability, which are not just technical problems but ethical and operational ones too.

To see how real-world AI pipelines are complemented by efficient development practices, explore how AI code review enhances Ruby on Rails projects. This shows how code optimization and AI training work together to deliver robust solutions.

JetRuby’s Approach to AI in Practice

JetRuby doesn’t just talk about AI; the team uses it. Their development strategy applies AI to many of the training data issues discussed in this article:

Detecting Anomalies & Ensuring Data Accuracy: JetRuby’s AI checks for and reports data problems in real time, building trust and reliability in their applications.

Quality Testing Automation (AiQA): They use machine learning to speed up and improve quality assurance, adapting tests over time, finding defects more quickly, and lowering the risks of going to market.

Optimizing Coding with AI: By giving developers real-time suggestions, preventing errors, and improving workflows, JetRuby makes coding up to 40% faster, even in heavily regulated industries like HealthTech and FinTech.

In short, AI training needs high-quality, diverse, and well-organized data. JetRuby uses this data effectively with AI systems that improve development processes, ensure accuracy, and enhance long-term efficiency.

This mix of theory and practice makes JetRuby a leader in creating AI systems and using them to develop better software.